Multithreaded Servers

Threads can be used to serve requests from multiple clients that make their requests concurrently.

Serving Multiple Clients

In client/server computing, a server provides a hardware or software service, and clients make requests to the server. Typically, clients and servers run on different computers. The operations in server-side scripting are:

- define addresses, port numbers, sockets,

- wait to accept client requests,

- service the requests of clients.

For multiple clients, the server could either

- wait until everyone is connected before serving, or

- accept connections and serve one at a time.

In either protocol, clients are blocked waiting.
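For comparison, here is a minimal sketch of the second protocol: a single-threaded server that accepts connections and serves them one at a time. It assumes a loopback address and lets the operating system choose a free port (port 0). The functions serve_one_at_a_time, send_message and demo are hypothetical, and the clients are simulated by threads so that the sketch runs in a single process.

```python
from socket import socket as Socket
from socket import AF_INET, SOCK_STREAM
from threading import Thread

def serve_one_at_a_time(server, nbr, buf=80):
    """
    Accepts nbr connections on the listening socket server and
    serves them strictly one after the other: while one client
    is served, the others wait in the listen backlog.
    Returns the messages in the order they were served.
    """
    received = []
    for _ in range(nbr):
        client, _ = server.accept()   # blocks until a client connects
        received.append(client.recv(buf).decode())
        client.close()
    return received

def send_message(port, msg):
    """A minimal client: connect, send one message, close."""
    sock = Socket(AF_INET, SOCK_STREAM)
    sock.connect(('127.0.0.1', port))
    sock.sendall(msg.encode())
    sock.close()

def demo(nbr=3):
    """Runs the sequential server with nbr simulated clients."""
    server = Socket(AF_INET, SOCK_STREAM)
    server.bind(('127.0.0.1', 0))     # port 0: the OS picks a free port
    server.listen(nbr)
    port = server.getsockname()[1]
    clients = [Thread(target=send_message, args=(port, 'msg %d' % i))
               for i in range(nbr)]
    for c in clients:
        c.start()
    messages = serve_one_at_a_time(server, nbr)
    for c in clients:
        c.join()
    server.close()
    return messages

if __name__ == '__main__':
    print(demo())
```

The order of the messages depends on which client connects first, but at any moment only one client is being served.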

Imagine a printer shared between multiple users connected in a computer network. Handling a request to print could include:

- accepting the path name of the file,

- verifying whether the file exists,

- placing the request in the printer queue.

Each of these three stages involves network communication, with possible delays during which other requests could be handled. A multithreaded print server can run multiple handlers simultaneously.

Imagine an automated bank teller:

- ask for the account number,

- ask the client for a password,

- prompt for a menu selection, etc.

Each of these questions may lead to extra delays during which other clients could be serviced. Running multiple threads on the server uses the time the server would otherwise spend idle, waiting for one client, to serve the requests of other clients.

In an object-oriented implementation of a multithreaded server, we will use sockets and threads. The main program will

- define the address, port number, and server socket,

- launch the handler threads of the server.

Typically, we will have as many handler threads as the number of connections the server listens to.

Each handler inherits from the class Thread:

- the constructor of the handler stores a reference to the server socket as a data attribute,

- the run method contains the code to accept connections and handle requests.

Locks are needed to manage shared resources safely.
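A minimal sketch of why locks matter, assuming handlers that share a counter. The hypothetical class CountingHandler inherits from Thread as described above, and each thread increments the shared counter only while holding a threading.Lock, so that no update is lost.

```python
from threading import Thread, Lock

class CountingHandler(Thread):
    """
    Hypothetical handler that increments a counter shared
    by all handlers; the lock makes each update atomic.
    """
    def __init__(self, name, counter, lock, reps):
        Thread.__init__(self, name=name)
        self.counter = counter  # shared one-element list
        self.lock = lock        # shared lock protecting counter
        self.reps = reps

    def run(self):
        for _ in range(self.reps):
            with self.lock:     # only one thread updates at a time
                self.counter[0] += 1

def main():
    counter = [0]
    lock = Lock()
    handlers = [CountingHandler(str(i), counter, lock, 10000)
                for i in range(3)]
    for handler in handlers:
        handler.start()
    for handler in handlers:
        handler.join()
    return counter[0]

print(main())  # prints 30000
```

Without the lock, the read-modify-write in counter[0] += 1 could interleave between threads, and the final count could come out lower.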

Waiting for Data from 3 Clients

As our first example of a multithreaded server, consider a server conducting a poll. Suppose 3 clients send a message to the server. Clients behave as follows:

- they may connect to the server at any time,

- typing in the message takes time.

The multithreaded server listens to 3 clients:

- three threads can handle requests,

- each thread simply receives a message,

- the server closes after the three threads are done.

We run the server script mtserver.py in a terminal window.

A session could run as follows.

```
$ python mtserver.py
give the number of clients : 3
server is ready for 3 clients
server starts 3 threads
0 accepted request from ('127.0.0.1', 49153)
0 waits for data
1 accepted request from ('127.0.0.1', 49154)
1 waits for data
1 received this is B
0 received this is A
2 accepted request from ('127.0.0.1', 49155)
2 waits for data
2 received this is C
$
```

The clients run simultaneously in separate terminal windows.

```
$ python mtclient.py
client is connected
Give message : this is A
$
```

```
$ python mtclient.py
client is connected
Give message : this is B
$
```

```
$ python mtclient.py
client is connected
Give message : this is C
$
```

The client sends a message to the server; the code in the script mtclient.py is below.

```python
from socket import socket as Socket
from socket import AF_INET, SOCK_STREAM

HOSTNAME = 'localhost'  # on same host
PORTNUMBER = 11267      # same port number
BUFFER = 80             # size of the buffer

SERVER_ADDRESS = (HOSTNAME, PORTNUMBER)
CLIENT = Socket(AF_INET, SOCK_STREAM)
CLIENT.connect(SERVER_ADDRESS)

print('client is connected')
DATA = input('Give message : ')
CLIENT.send(DATA.encode())

CLIENT.close()
```

The main() in mtserver.py first defines the server socket. The definition of the server socket to serve a number of clients happens in the function connect(). Every client is served by a different thread. After the execution of connect(), the handler threads are launched.

```python
def main():
    """
    Prompts for number of connections,
    starts the server and handler threads.
    """
    nbr = int(input('give the number of clients : '))
    buf = 80
    server = connect(nbr)
    print('server is ready for %d clients' % nbr)
    handlers = []
    for i in range(nbr):
        handlers.append(Handler(str(i), server, buf))
    print('server starts %d threads' % nbr)
    for handler in handlers:
        handler.start()
    print('waiting for all threads to finish ...')
    for handler in handlers:
        handler.join()
    server.close()
```

It is important that we wait for all handler threads to finish before calling server.close().

The connect() is defined below.

```python
from socket import socket as Socket
from socket import AF_INET, SOCK_STREAM

def connect(nbr):
    """
    Connects a server to listen to nbr clients.
    Returns the server socket.
    """
    hostname = ''        # to use any address
    portnumber = 11267   # number for the port
    server_address = (hostname, portnumber)
    server = Socket(AF_INET, SOCK_STREAM)
    server.bind(server_address)
    server.listen(nbr)
    return server
```

A handler thread is modeled by an object of the class Handler, which inherits from the Thread class. The structure of the Handler class is listed below.

```python
from threading import Thread

class Handler(Thread):
    """
    Defines handler threads.
    """
    def __init__(self, n, sock, buf):
        """
        Name of handler is n, server
        socket is sock, buffer size is buf.
        """

    def run(self):
        """
        Handler accepts connection,
        prints message received from client.
        """
```

The data attributes of each handler object are the server socket and the buffer size.

```python
def __init__(self, n, sock, buf):
    """
    Name of handler is n, server
    socket is sock, buffer size is buf.
    """
    Thread.__init__(self, name=n)
    self.srv = sock
    self.buf = buf
```

The run method in a Handler thread defines the handling of one client.

```python
def run(self):
    """
    Handler accepts connection,
    prints message received from client.
    """
    handler = self.name  # getName() is deprecated since Python 3.10
    server = self.srv
    buffer = self.buf
    client, client_address = server.accept()
    print(handler + ' accepted request from', client_address)
    print(handler + ' waits for data')
    data = client.recv(buffer).decode()
    print(handler + ' received', data)
    client.close()  # release the connection to this client
```
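To try the Handler class without opening four terminal windows, the sketch below runs the server and three simulated clients in one process. It is a condensed variant of mtserver.py and mtclient.py under these assumptions: the server binds to 127.0.0.1 on port 0 so that the operating system picks a free port, the clients are threads calling a hypothetical send_message() instead of prompting with input(), and each handler keeps the received message in an extra data attribute so that it can be checked afterwards. The function run_poll is also hypothetical.

```python
from socket import socket as Socket
from socket import AF_INET, SOCK_STREAM
from threading import Thread

class Handler(Thread):
    """Handles one client on the shared server socket."""
    def __init__(self, n, sock, buf):
        Thread.__init__(self, name=n)
        self.srv = sock
        self.buf = buf
        self.data = None  # message received, kept for inspection

    def run(self):
        client, client_address = self.srv.accept()
        print(self.name + ' accepted request from', client_address)
        self.data = client.recv(self.buf).decode()
        print(self.name + ' received', self.data)
        client.close()

def send_message(port, msg):
    """Plays the role of mtclient.py without the input() prompt."""
    sock = Socket(AF_INET, SOCK_STREAM)
    sock.connect(('127.0.0.1', port))
    sock.sendall(msg.encode())
    sock.close()

def run_poll(messages):
    """Serves one handler thread per message; returns the sorted messages."""
    server = Socket(AF_INET, SOCK_STREAM)
    server.bind(('127.0.0.1', 0))  # port 0: the OS picks a free port
    server.listen(len(messages))
    port = server.getsockname()[1]
    handlers = [Handler(str(i), server, 80) for i in range(len(messages))]
    for handler in handlers:
        handler.start()
    clients = [Thread(target=send_message, args=(port, m)) for m in messages]
    for client in clients:
        client.start()
    for client in clients:
        client.join()
    for handler in handlers:
        handler.join()  # wait for all handlers before closing
    server.close()
    return sorted(handler.data for handler in handlers)

if __name__ == '__main__':
    print(run_poll(['this is A', 'this is B', 'this is C']))
```

As in the terminal session, which handler accepts which client varies from run to run; sorting the received messages makes the result independent of that order.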

n Handler Threads for m Client Requests

Consider the following situation:

- \(n\) threads are available to handle requests,

- a total number of \(m\) requests will be handled,

- \(m\) is always larger than \(n\).

As an example application, consider a call center with 8 operators, paid to handle 100 calls per day. Some operators handle few calls that take a long time. Other operators handle many short calls. An application in computational science is dynamic load balancing, where one has to handle a number of jobs whose durations are not known in advance.

In our previous multithreaded server, after starting the threads, the following three steps were executed:

- The request of a client is accepted by one handler.

- The request is handled by a thread.

- After handling request, the thread dies.

In this simple protocol, the server is blocked waiting until all threads have received and handled their requests.

To handle \(m\) requests by \(n\) server threads:

- The server checks the status of thread t with t.is_alive().

- If the thread is dead, then the server increases the counter of requests handled, and starts a new thread if the number of requests still to be handled is larger than the number of live threads.
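The rule in the second step can be sketched without sockets. In the hypothetical function manage_jobs below, each request is simulated by a thread that sleeps for a given duration. Whenever the main thread finds a dead thread, it counts one handled request and starts a new thread only if some requests have not been started yet, which is the same as requiring the number of requests still to be handled to exceed the number of live threads. This is a simplified variant for illustration, not the manage() function of mtnserver.py.

```python
from threading import Thread
from time import sleep

def job(duration):
    """Stands in for handling one request."""
    sleep(duration)

def manage_jobs(durations, nbr, poll=0.01):
    """
    Keeps at most nbr threads alive; each time a thread is
    found dead, counts one handled request and starts a new
    thread while requests remain. Returns the count.
    """
    pending = list(durations)  # requests not yet started
    threads = []
    for _ in range(min(nbr, len(pending))):
        thread = Thread(target=job, args=(pending.pop(0),))
        thread.start()
        threads.append(thread)
    cnt = 0
    while threads:
        sleep(poll)  # poll the status of the threads
        for thread in list(threads):  # iterate over a copy
            if not thread.is_alive():
                cnt += 1  # one more request handled
                threads.remove(thread)
                if pending:  # a new thread replaces the dead one
                    new = Thread(target=job, args=(pending.pop(0),))
                    new.start()
                    threads.append(new)
    return cnt

if __name__ == '__main__':
    print(manage_jobs([0.05, 0.01, 0.02, 0.03, 0.01, 0.04, 0.02], 3))
```

Only the main thread updates cnt, so, as in the text, no lock is needed for the counter.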

A run of the server mtnserver.py could look as follows.

```
$ python mtnserver.py
give the number of clients : 3
give the number of requests : 7
server is ready for 3 clients
server starts 3 threads
0 is alive, cnt = 0
1 is alive, cnt = 0
2 is alive, cnt = 0
0 accepted request from ('127.0.0.1', 49234)
0 waits for data
0 is alive, cnt = 0
1 is alive, cnt = 0
2 is alive, cnt = 0
0 received 1
1 accepted request from ('127.0.0.1', 49235)
1 waits for data
0 handled request 1
restarting 0
1 is alive, cnt = 1
2 is alive, cnt = 1
1 received 2
0 is alive, cnt = 1
1 handled request 2
restarting 1
2 is alive, cnt = 2
... etcetera ...
```

The code to manage the handler threads has the following modified main().

```python
def main():
    """
    Prompts for the number of connections,
    starts the server and the handler threads.
    """
    ncl = int(input('give the number of clients : '))
    mrq = int(input('give the number of requests : '))
    server = connect(ncl)
    print('server is ready for %d clients' % ncl)
    handlers = []
    for i in range(ncl):
        handlers.append(Handler(str(i), server, 80))
    print('server starts %d threads' % ncl)
    for handler in handlers:
        handler.start()
    manage(handlers, mrq, server)
    server.close()
```

A thread cannot be restarted, but we can create a new thread with the same name.
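The following sketch checks this behavior of the threading module: calling start() a second time on a finished Thread object raises a RuntimeError, while a fresh Thread object with the same name starts normally. The function work is a placeholder.

```python
from threading import Thread

def work():
    """Placeholder thread body that returns immediately."""
    pass

t = Thread(target=work, name='0')
t.start()
t.join()                     # t has now finished

try:
    t.start()                # a Thread object can be started only once
    restarted = True
except RuntimeError:
    restarted = False

t = Thread(target=work, name='0')  # new object, same name
t.start()
t.join()
print(restarted, t.name)     # prints False 0
```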

Recall that the server maintains a list of threads. Although a dead thread cannot be restarted, a new thread can take its place in the list, as done by the function restart below.

```python
def restart(threads, sck, i):
    """
    Deletes the i-th dead thread from threads
    and inserts a new thread at position i.
    The socket server is sck. Returns new threads.
    """
    del threads[i]
    threads.insert(i, Handler(str(i), sck, 80))
    threads[i].start()
    return threads
```

The restart() is called in the function below.

```python
from time import sleep

def manage(threads, mrq, sck):
    """
    Given a list of threads, restarts threads
    until mrq requests have been handled.
    The socket server is sck.
    """
    cnt = 0
    nbr = len(threads)
    dead = []  # indices of threads that will not be replaced
    while cnt < mrq:
        sleep(2)  # poll the threads every two seconds
        for i in range(nbr):
            if i in dead:
                pass
            elif threads[i].is_alive():  # isAlive() was removed in Python 3.9
                print(i, 'is alive, cnt =', cnt)
            else:
                cnt = cnt + 1
                print('%d handled request %d' % (i, cnt))
                if cnt >= mrq:
                    break
                elif cnt <= mrq-nbr:
                    print('restarting', i)
                    threads = restart(threads, sck, i)
                else:
                    dead.append(i)
```

Observe that only the main server thread manipulates the counter, so locks are not needed.

Pleasingly Parallel Computations

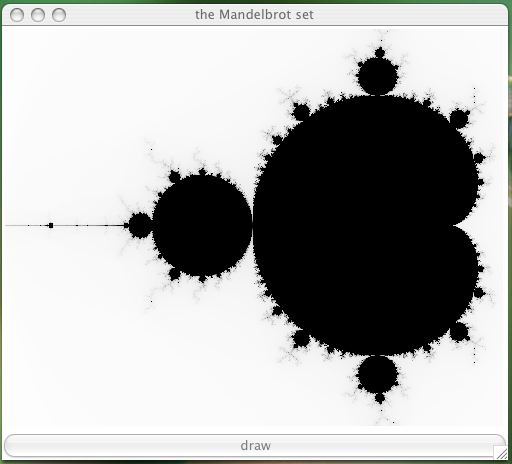

A pleasingly parallel computation is a parallel computation with little or no communication overhead. An example is the computation of the Mandelbrot set, shown in Fig. 93.

Fig. 93 The Mandelbrot set.

The Mandelbrot set is defined by an iterative algorithm:

- A point in the plane with coordinates \((x,y)\) is represented by \(c = x + iy\), \(i = \sqrt{-1}\).

- Starting at \(z = 0\), count the number of iterations of the map \(z \rightarrow z^2 + c\) to reach \(|z| > 2\).

- This number \(k\) of iterations determines the inverted grayscale \(255 - k\) of the pixel with coordinates \((x,y)\) in the plot for \(x \in [-2,0.5]\) and \(y \in [-1,+1]\).
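A sketch of the iteration count for one point, assuming the count is capped at 255 iterations for points that do not escape (the cap is an assumption of this sketch; the text above does not state it). The function names escape_count and grayscale are hypothetical.

```python
def escape_count(x, y, maxit=255):
    """
    Returns the number k of iterations of z -> z**2 + c, starting
    at z = 0 with c = x + iy, needed to reach |z| > 2.
    Points that have not escaped after maxit checks return maxit
    (the cap of 255 is an assumption of this sketch).
    """
    c = complex(x, y)
    z = 0j
    for k in range(maxit):
        if abs(z) > 2:
            return k
        z = z*z + c
    return maxit

def grayscale(x, y):
    """Inverted grayscale 255 - k of the pixel at (x, y)."""
    return 255 - escape_count(x, y)

print(escape_count(3, 0), grayscale(0, 0))  # prints 1 0
```

For \(c = 0\) the orbit stays at 0, so the count reaches the cap and the pixel is black (grayscale 0); for \(c = 3\) a single iteration gives \(|z| = 3 > 2\).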

To display on a canvas 400 pixels high and 500 pixels wide, we need 16,652,580 iterations. This computation is pleasingly parallel because threads working on different pixels need not communicate with each other.

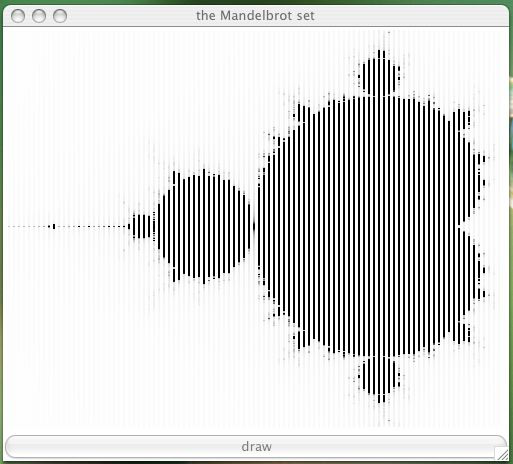

If we use five processors, then each processor computes one fifth of Fig. 93, as shown in Fig. 94.

Fig. 94 One fifth of the Mandelbrot set.

The manager/worker paradigm can be implemented via a client/server parallel computation. The server manages a job queue, e.g., the numbers of the columns on the canvas whose pixel grayscales must be computed. The \(n\) handler threads serve \(n\) clients. The clients perform the computational work, scheduled by the server.

Here we use a simple static workload assignment. Handler thread \(t\), for \(t = 0, 1, \ldots, n-1\), manages all columns \(k\) for which \(k \bmod n = t\). For example, for \(n=2\), the first thread takes the even columns, while the second thread takes the odd columns. A client receives the column number from the server and returns a list of grayscales, one for each row.
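The static assignment fits in one list comprehension. The hypothetical function columns_for_thread below returns the columns handled by thread \(t\) out of \(n\) on a canvas of the given width.

```python
def columns_for_thread(t, n, width):
    """
    Static assignment: handler thread t (0 <= t < n) gets every
    column k of a canvas of the given width with k mod n == t.
    """
    return [k for k in range(width) if k % n == t]

print(columns_for_thread(1, 2, 10))  # prints [1, 3, 5, 7, 9]
```

Equivalently, range(t, width, n) enumerates the same columns. Since every column index has exactly one remainder modulo \(n\), the \(n\) threads together cover each column exactly once.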

Exercises

- Only threads can terminate themselves. Write a script that starts 3 threads. One thread prompts the user to continue or not, the other threads are busy waiting, checking the value of a shared boolean variable. When the user has given the right answer to terminate, the shared variable changes and all threads stop.

- Write a multithreaded server with the capability to stop all running threads. All handler threads stop when one client passes the message 'stop' to one server thread.

- Use a multithreaded server to estimate \(\pi\) by counting the number of samples \((x,y) \in [0,1] \times [0,1]\) with \(x^2 + y^2 \leq 1\). The job queue maintained by the server is a list of tuples. Each tuple contains the number of samples and the seed for the random number generator.