Web Clients and Crawlers¶

Web Clients¶

We do not really need Apache to host a web service. The client is a browser, e.g.: Netscape, Firefox, ... We can browse the web using scripts, without a browser.. Why do we want to do this?

- more efficient, without overhead from the GUI,

- in control, we request only what we need,

- crawl the web, conduct a recursive search.

Python provides the urllib and urlparse modules.

For example, consider the retrieval of the weather forecast for Chicago.

$ python3 forecast.py

opening http://tgftp.nws.noaa.gov/data/forecasts/state/il/ilz013.txt ...

Today Sat Sun Mon Tue Wed Thu

Apr 07 Apr 08 Apr 09 Apr 10 Apr 11 Apr 12 Apr 13

Chicago Downtown

SUNNY SUNNY MOCLDY PTCLDY PTCLDY SUNNY MOCLDY

/50 38/67 54/72 56/68 45/54 40/48 42/59

/00 00/00 00/10 30/30 30/10 10/00 20/40

Chicago O'hare

SUNNY SUNNY MOCLDY PTCLDY PTCLDY SUNNY MOCLDY

/55 37/67 54/73 57/69 44/56 39/56 41/60

/00 00/00 10/10 40/40 30/10 10/00 30/40

The script forecast.py is listed below.

from urllib.request import urlopen

HOST = 'http://tgftp.nws.noaa.gov'

FCST = '/data/forecasts/state'

URL = HOST + FCST + '/il/ilz013.txt'

print('opening ' + URL + ' ...\n')

DATA = urlopen(URL)

while True:

LINE = DATA.readline().decode()

if LINE == '':

break

L = LINE.split(' ')

if 'FCST' in L:

LINE = DATA.readline().decode()

print(LINE + DATA.readline().decode())

if 'Chicago' in L:

LINE = LINE + DATA.readline().decode()

LINE = LINE + DATA.readline().decode()

print(LINE + DATA.readline().decode())

The processing of a web page is similar to processing a file.

As example, consider the copying of a web page to a file.

The syntax of the urlretrieve is

urlretrieve( < URL >, < file name > )

For example,

from urllib.request import urlretrieve

urlretrieve('http://www.python.org','wpt.html')

The above statements copy the page at http://www.python.org

to the file wpt.html.

To practice the tools to browse web pages with a script,

we will do the same as urlretrieve does,

reading the page in small increment.

First, we open a web page with urllib.request.urlopen,

its syntax is below.

from urllib.request import urlopen

< object like file > = urlopen( < URL > )

Then we read data, with the application of read method:

data = < object like file >.read( < size > ).decode()

where size is the number of bytes in the buffer.

The read returns a sequence of bytes.

To turn the sequence of bytes into a string,

we have to apply the decode() method.

After reading, we close the page:

< object like file >.close()

The open, read, close methods

are similar to the methods on files.

The opening of a web page is surrounded by an exception handler in the code below.

def main():

"""

Prompts the user for a web page,

a file name, and then starts copying.

"""

from urllib.request import urlopen

print('making a local copy of a web page')

url = input('Give URL : ')

try:

page = urlopen(url)

except:

print('Could not open the page.')

return

name = input('Give file name : ')

copypage(page, name)

The function to copy a web page to a file is defined below.

def copypage(page, file):

"""

Given the URL for the web page,

a copy of its contents is written to file.

Both url and file are strings.

"""

copyfile = open(file, 'w')

while True:

try:

data = page.read(80).decode()

except:

print('Could not decode data.')

break

if data == '':

break

copyfile.write(data)

page.close()

copyfile.close()

Scanning Files¶

The web pages we download are formatted HTML files. Applications to scan an HTML file are for example:

- search for particular information,

for example download all

.pyfiles from the course web site; - navigate to where the page refers to,

for example, retrieve all URLs the page

www.python.orgrefers to.

What is common between these two examples:

.py files and URLs appear between

double quotes in the files.

So we will scan a file for all strings between double quotes.

The problem statement is

- Input: a file, or object like a file.

- Output: list of all strings between double quotes.

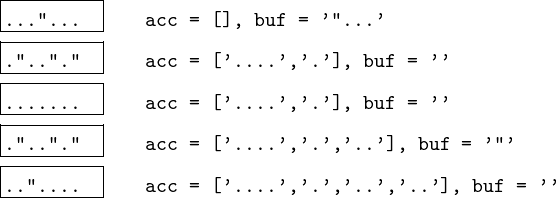

Recall that we read files with fixed size buffer, as illustrated in Fig. 97.

Fig. 97 Reading files with a buffer of fixed size.

For double quoted strings which run across two buffers we need another buffer. So we have to manage two buffers, one for reading strings from file, and another for buffering double quoted string. We will have two functions, one to read buffered data from file, and another to scan the data buffer for double quoted strings.

The code to read strings from file is listed below.

def quoted_strings(file):

"""

Given a file object, this function scans

the file and returns a list of all strings

on the file enclosed between double quotes.

"""

result = []

buffer = ''

while True:

data = file.read(80)

if data == '':

break

(result, buffer) = update_qstrings(result, buffer, data)

return result

We perform a buffered reading of the file.

In acc we store the double quoted strings.

In buf we buffer the double quoted strings.

In Fig. 98, every dot . represents a character.

Fig. 98 Processing strings with two buffers.

In quoted_strings we make the following call:

(result, buffer) = update_qstrings(result, buffer, data)

Code for the function is listed below.

def update_qstrings(acc, buf, data):

"""

acc is a list of double quoted strings,

buf buffers a double quoted string, and

data is the string to be processed.

Returns an updated (acc, buf).

"""

newbuf = buf

for char in data:

if newbuf == '':

if char == '\"':

newbuf = 'o' # 'o' is for 'opened'

else:

if char != '\"':

newbuf += char

else: # do not store 'o'

acc.append(newbuf[1:len(newbuf)])

newbuf = ''

return (acc, newbuf)

The function main() is defined below.

def main():

"""

Prompts the user for a file name and

scans the file for double quoted strings.

"""

print('getting double quoted strings')

name = input('Give a file name : ')

file = open(name, 'r')

strs = quoted_strings(file)

print(strs)

file.close()

Recall the second example application:

list all URLs referred to at http://www.python.org

so we need to scan the web pages for URLs.

def main():

"""

Prompts the user for a web page,

and prints all URLs this page refers to.

"""

print('listing reachable locations')

page = input('Give URL : ')

links = httplinks(page)

print('found %d HTTP links' % len(links))

show_locations(links)

The filtering of double quoted strings and extracting the URLs starts with the function below.

from scanquotes import update_qstrings

def httpfilter(strings):

"""

Returns from the list strings only

those strings which begin with http.

"""

result = []

for name in strings:

if len(name) > 4:

if name[0:4] == 'http':

result.append(name)

return result

In the function httplinks, we first open the URL

and then we read that page in search for double quoted strings.

def httplinks(url):

"""

Given the URL for the web page,

returns the list of all http strings.

"""

from urllib.request import urlopen

try:

print('opening ' + url + ' ...')

page = urlopen(url)

except:

print('opening ' + url + ' failed')

return []

(result, buf) = ([], '')

while True:

try:

data = page.read(80).decode()

except:

print('could not decode data')

break

if data == '':

break

(result, buf) = update_qstrings(result, buf, data)

result = httpfilter(result)

page.close()

return result

An URL consists of 6 parts

protocol://location/path:parameters?query#frag

Given a URL u, the urlparse(u) returns a 6-tuple.

def show_locations(links):

"""

Shows the locations of the URLs in links.

"""

from urllib.parse import urlparse

for url in links:

pieces = urlparse(url)

print(pieces[1])

Web Crawlers¶

Web crawlers make requests recursively. We scang HTML files and browse as follows:

given a URL, open a web page,

compute the list of all URLs in the page,

for all URLs in the list do:

- open the web page defined by location of URL,

- compute the list of all URLs on that page.

then continue recursively, crawling the web.

Some things we have to consider:

- remove duplicates from list of URLs,

- do not turn back to pages visited before,

- limit the levels of recursion,

- some links will not work.

This is very similar to finding a path in a maze, but now we are interested in all intermediate nodes along the path.

The running of the crawler is illustrated below.

$ python webcrawler.py

crawling the web ...

Give URL : http://www.uic.edu

give maximal depth : 2

opening http://www.uic.edu ...

opening http://maps.uic.edu ...

could not decode data

opening http://maps.google.com ...

opening http://maps.googleapis.com ...

opening http://maps.googleapis.com failed

opening http://fimweb.fim.uic.edu ...

.. it takes a while ..

total #locations : 3954

In 2010: #locations : 538

In a modular design of the code for the crawler, we start with

from scanhttplinks import httplinks

We still are left to write: code to manage the list of server locations, and the recursive function to crawl the web.

To retain only new Locations, we filter the list of links with the function defined below.

def new_locations(links, visited):

"""

Given the list links of new URLs and the

list of already visited locations,

returns the list of new locations,

locations not yet visited earlier.

"""

from urllib.parse import urlparse

result = []

for url in links:

parsed = urlparse(url)

loc = parsed[1]

if loc not in visited:

if loc not in visited:

result.append(loc)

return result

Recall that we store only the server locations.

To open a web page we also need to specify the protocol.

We apply urlparse.urlunparse as follows:

>>> from urlparse import urlunparse

>>> urlunparse(('http','www.python.org',

... '','','',''))

'http://www.python.org'

We must provide a 6-tuple as argument.

The function main() is defined below.

def main():

"""

Prompts the user for a web page,

and prints all URLs this page refers to.

"""

print('crawling the web ...')

page = input('Give URL : ')

depth = int(input('give maximal depth : '))

locations = crawler(page, depth, [])

print('reachable locations :', locations)

print('total #locations :', len(locations))

The code for the crawler is provided in the function below.

def crawler(url, k, visited):

"""

Returns the list visited updated with the

list of locations reachable from the

given url using at most k steps.

"""

from urllib.parse import urlunparse

links = httplinks(url)

newlinks = new_locations(links, visited)

result = visited + newlinks

if k == 0:

return result

else:

for loc in newlinks:

url = urlunparse(('http', loc, '', '', '', ''))

result = crawler(url, k-1, result)

return result

Exercises¶

- Write a script to download all

.pyfiles from the course web site. - Limit the search of the crawler so that it only opens

pages within the same domain. For example, if we

start at a location ending with

edu, we only open pages with locations ending withedu. - Adjust

webcrawler.pyto search for a path between two locations. The user is prompted for two URLs. Crawling stops if a path has been found. - Write an iterative version for the web crawler.

- Use the stack in the iterative version of the crawler from the previous exercise to define a tree of all locations that can be reached from a given URL.