Least Squares Chebyshev and Fourier¶

We consider the problem of approximating functions twice: once with a basis of Chebyshev polynomials, and once with a basis of Fourier series.

Approximating Functions with Orthogonal Bases¶

Consider an interval \([a,b]\) and a basis of functions \(\phi_i\), \(i=0,1,2,\ldots\), equipped with an inner product:

for any pair of functions \(f\) and \(g\). If for the basis functions \(\phi_i\) we have

then we say that the basis \(\{ \phi_0, \phi_1, \phi_2, \ldots \}\) is orthogonal.

For any function \(f(x)\) over \([a,b]\), consider the series

Truncate the series to the first \(n+1\) terms:

Because \(c_i = \langle f, \phi_i \rangle\) and \(\phi_i\) is an orthogonal basis:

and therefore \(p\) is the least squares approximation for \(f\).

Chebyshev Approximations¶

From the definition of the Chebyshev polynomial it is not immediately obvious that \(T_n(x)\) is a polynomial. Chebyshev polynomials can be computed via the recursion:

Now we can verify that for the base cases of the recursion, we obtain polynomials: \(T_0(x) = \cos(0) = 1\), \(T_1(x) = \cos( \arccos(x)) = x\).

For general degree \(n\), consider the identities:

Apply the above identity to \(a = n \arccos(x)\), \(b = \arccos(x)\).

Any polynomial can be written in the Chebyshev polynomial basis. Consider \(T_{n+1}(x) = 2x T_n(x) - T_{n-1}(x)\), \(T_0(x) = 1\), \(T_1(x) = x\). Writing out the first six polynomials, in expanded form:

and rearranging in matrix-vector notation gives:

Observe that the lower triangular matrix is invertible. Using the inverse of the above matrix, any polynomial of degree 5 or less can can be written as a linear combination of Chebyshev polynomials. This shows that we have a basis of polynomials.

The Chebyshev basis is orthogonal with respect to the inner product

For the Chebyshev polynomials \(T_i\), we have

Therefore, the Chebyshev polynomials form an orthogonal basis.

We can approximate any function \(f\) with a Chebyshev series:

for \(i=0,1,\ldots,n\), where

is the truncation of the Chebyshev series.

By construction: \(\langle f - p, T_i \rangle = 0\), \(i=0,1,\ldots,n\). The polynomial \(p\) is a least squares approximation for \(f\).

The Julia function below defines an array of basis functions.

"""

function basis(n::Int64)

returns an array of functions with the first n

Chebyshev polynomials.

"""

function basis(n::Int64)

t0(x) = 1

result = [t0]

if(n > 1)

t1(x) = x

result = [result; t1]

t1two(x) = 2*x

for i=3:n

newt(x) = t1two(x)*result[i-1](x) - result[i-2](x)

result = [result; newt]

end

end

return result

end

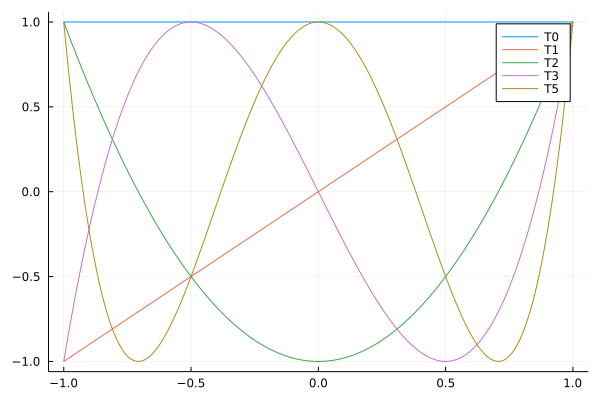

The function basis is then used to make the plot

in Fig. 50.

Fig. 50 The first five Chebyshev polynomials.¶

We compute the inner product via Gauss-Chebyshev quadrature:

as defined in the function innerproduct below:

using FastGaussQuadrature

nodes, weights = gausschebyshev(16)

rule(f) = sum([weights[k]*f(nodes[k]) for k=1:length(weights)])

"""

function innerproduct(f::Function, g::Function)

returns the value of the inner product of the functions f and g,

by the integral of f and g over [-1,+1] with weight 1/sqrt(1-x^2).

"""

function innerproduct(f::Function, g::Function)

fun(x) = f(x)*g(x)

return (2.0/pi)*rule(fun)

end

Verifying the orthogonality of the basis, all inner products for the first five basis functions \(T_i\), \(i=0,1,2,3,4\), at row \(i\) and column \(j\) is the value of \(\langle T_i, T_j \rangle\):

+2.00e+00 +1.06e-16 -5.30e-17 +1.06e-16 -7.07e-17

+1.06e-16 +1.00e+00 +1.59e-16 -8.83e-17 +2.65e-16

-5.30e-17 +1.59e-16 +1.00e+00 +3.00e-16 -2.12e-16

+1.06e-16 -8.83e-17 +3.00e-16 +1.00e+00 +3.71e-16

-7.07e-17 +2.65e-16 -2.12e-16 +3.71e-16 +1.00e+00

We observe the identity matrix (modulo roundoff of machine precision), except for the first number in the first row and column, which equals 2.

The coefficients of the Chebyshev approximant are computed

with respect to the basis.

The Julia function coefficients

computes \(\langle f, T \rangle\)

for all \(T\) in the basis.

"""

function coefficients(f::Function,b::Array{Function,1})

returns the inner products of f with the basis functions in b.

"""

function coefficients(f::Function,b::Array{Function,1})

dim = length(b)

result = zeros(dim)

for i=1:dim

result[i] = innerproduct(f,b[i])

end

return result

end

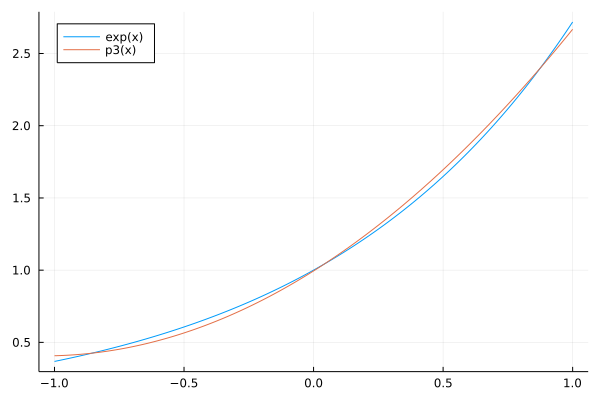

As an illustration, we compute a cubic approximation for \(\exp\):

The cubic least squares approximant for \(\exp(x)\) is shown in Fig. 51.

Fig. 51 A Chebyshev approximation for \(exp(x)\) of degree 3.¶

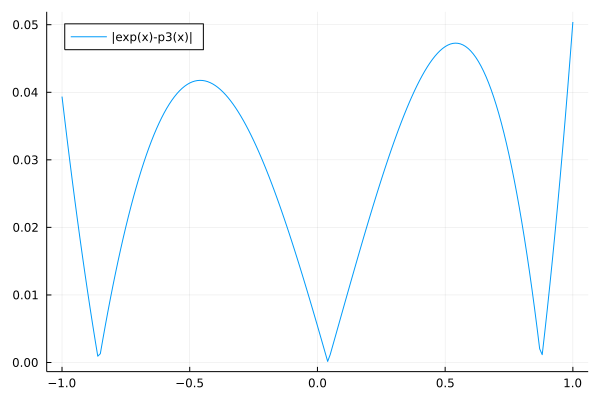

The error of the cubic least squares approximant is shown in Fig. 52.

Fig. 52 The error of the cubic least squares approximant of \(exp(x)\).¶

Fitting Functions with Fourier Series¶

Many functions are periodic and to fit those functions, we use an orthogonal trigonometric basis. Starting with the constant function 1, we consider sine and cosine functions with increasing frequency, respectively \(\sin(k \pi t)\) and \(\cos(k \pi t)\), for \(k = 1,2,\ldots,n\). With respect to the inner product

the functions in the basis are orthogonal to each other. For any \(k\) and \(\ell\): \(\langle \sin(k \pi t), \cos(\ell \pi t) \rangle = 0\) and

The same holds for the cosine functions.

The least squares approximation for any function \(f(t)\) for \(t \in [-1,+1]\) is then

where the coefficients are computed as

Because of the orthogonal basis \(f-p\) is orthogonal to the basis and \(p\) is the least squares approximation for \(f\).

For the interval \([0,1]\), instead of \([-1,+1]\), consider the basis functions \(\cos(k 2 \pi t)\) and \(\sin(k 2 \pi t)\), \(k=1,2,\ldots\)

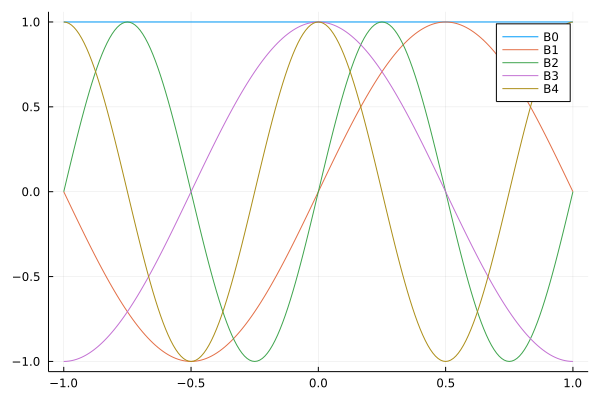

Fig. 53 The first five functions in the Fourier basis.¶

The first five basis functions shown in Fig. 53 uses the Julia function to define an array of basis functions. The basis functions are 1, \(\cos(k \pi t)\), and \(\sin(k \pi t)\), for \(k = 1,2,\ldots,n\).

"""

function basis(n::Int64)

returns an array of functions with

the first 2*n+1 basis polynomials.

"""

function basis(n::Int64)

one = [(t) -> 1]

sines = [(t) -> sin(k*pi*t) for k=1:n]

cosines = [(t) -> cos(k*pi*t) for k=1:n]

result = [one; sines; cosines]

return result

end

The inner product is computed via Gauss-Legendre quadrature:

and with the Julia code below:

using FastGaussQuadrature

nodes, weights = gausslegendre(32)

rule(f) = sum([weights[k]*f(nodes[k]) for k=1:length(weights)])

"""

function innerproduct(f::Function, g::Function)

returns the value of the inner product of the functions f and g,

by the integral of f and g over [-1,+1].

"""

function innerproduct(f::Function, g::Function)

fun(x) = f(x)*g(x)

return rule(fun)

end

To verify the orthogonality of the basis, we compute all inner products for the first five basis functions \(B_i\), \(i=0,1,2,3,4\), at row \(i\) and column \(j\) is the value of \(\langle B_i, B_j \rangle\):

+2.00e+00 +6.26e-17 +5.57e-17 +4.46e-16 -1.04e-16

+6.26e-17 +1.00e+00 +1.93e-16 +2.79e-17 -1.78e-17

+5.57e-17 +1.93e-16 +1.00e+00 -3.37e-17 -5.71e-18

+4.46e-16 +2.79e-17 -3.37e-17 +1.00e+00 +2.93e-16

-1.04e-16 -1.78e-17 -5.71e-18 +2.93e-16 +1.00e+00

We observe the identity matrix (modulo roundoff of machine precision), except for the first number in the first row and column, which equals 2.

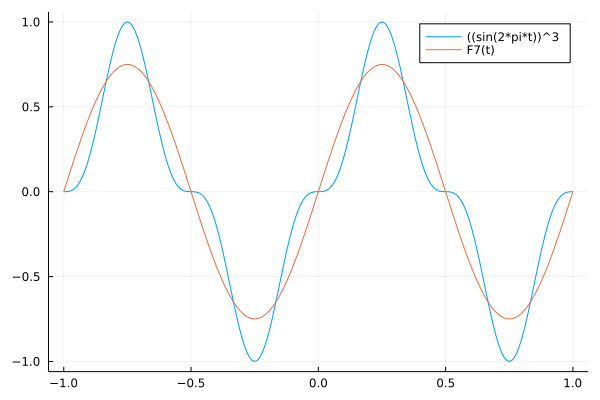

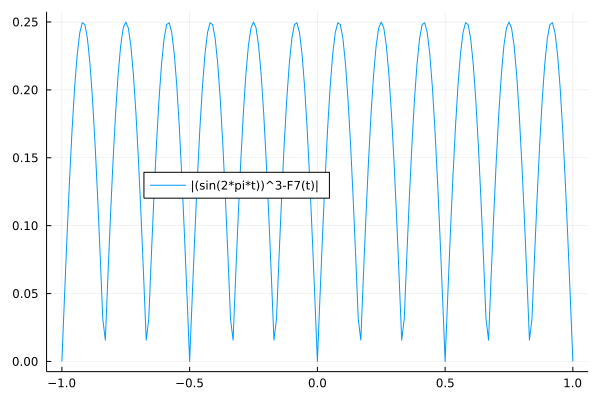

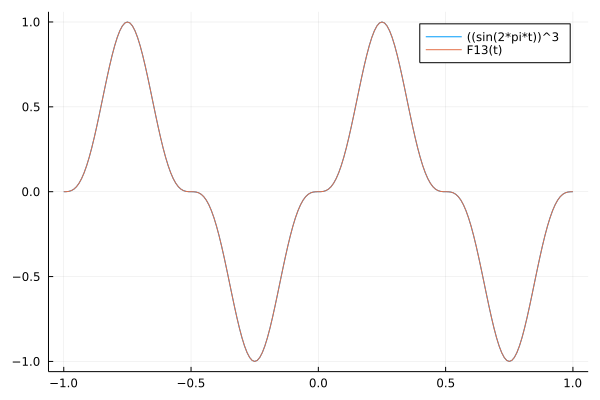

As an example of fitting a periodic function, consider \(f(t) = (\sin(2 \pi t))^3\), for \(t \in [-1,+1]\). We compute the coefficients

of

for

\(n = 3\) (7 basis functions), shown in Fig. 54 (with error in Fig. 55) and

\(n = 6\) (13 basis functions), shown in Fig. 56.

Fig. 54 The approximation with 7 basis functions.¶

Fig. 55 The error of the approximation with 7 basis functions.¶

Fig. 56 The approximation with 13 basis functions.¶

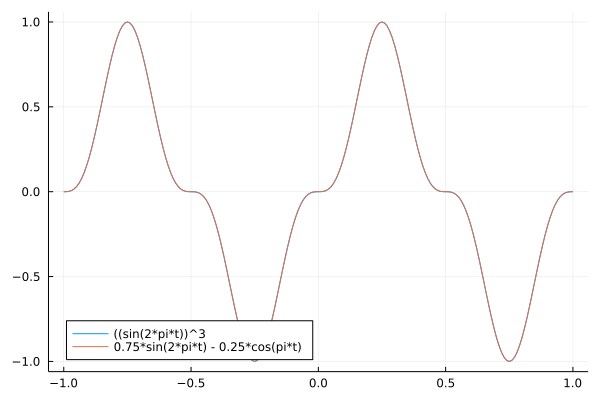

Looking at the Fourier series representation, for \(n = 6\) (13 basis functions), only two coefficients are nonzero:

\(0.75\) at index 3, and

\(-0.25\) at index 7.

These two indices respectively correspond to \(\sin(2 \pi t)\) and \(\cos(\pi t)\). Therefore, we can write

This symbolic result is shown in Fig. 57.

Fig. 57 A symbolic result of a Fourier approximation.¶

Proposals of Project Topics¶

Fit average daily temperatures.

Obtain the average temperature for each day of one year. Fit the data with a trigonometric basis.

Consider the following questions:

How large should the basis be for an accurate representation?

Compare the fit of one year on data of another year.

How good does the fit predict the temperature of the other year?

On the origin of the Chebyshev polynomials

How did Chebyshev arrive at his polynomials? Consider the paper by V. L. Goncharov: The Theory of Best Approximation of Functions. Journal of Approximation Theory 106:2–57, 2000.

The second section of this paper describes the memoir Theorie des mecanismes connus sous le nom de parallelogrammes, by Chebyshev, which appeared in 1854.

Consider the following questions:

Verify the reduction of computing a best approximating polynomial to a differential equation.

Illustrate your verification with some computational experiments.

Exercises¶

Consider the approximation of \(\exp(x)\) by Chebyshev polynomials.

How large should the degree of the approximant be for the largest error to be less than \(10^{-6}\) over \([-1,+1]\)?

Consider \(f(x) = 4 \sin(2 \pi x) + 3 \sin(5 \pi x)\) over \([-1,+1]\).

Compute a Chebyshev approximation with a high enough degree so the error is below \(10^{-6}\) for all \(x \in [-1,+1]\).

Consider \(f(x) = 4 \sin(2 \pi x) + 3 \sin(5 \pi x)\) over \([-1,+1]\).

How many terms in the trigonometric basis are needed to represent \(f\)? Verify this numerically.

Compare with the outcome of Exercise 2. Which method, Chebyshev polynomials or Fourier series, is preferred for this \(f\).

Consider Fourier series approximations for \(\exp(x)\) over \([-1,+1]\).

How many terms in the Fourier series are needed for the largest error in the approximation to be less than \(10^{-6}\)?

Compare with the outcome of Exercise 1. Which method, Chebyshev polynomials or Fourier series, is preferred for \(\exp(x)\)?