A Massively Parallel Processor: the GPU¶

Introduction to General Purpose GPUs¶

Thanks to the industrial success of video game development graphics processors became faster than general CPUs. General Purpose Graphic Processing Units (GPGPUs) are available, capable of double floating point calculations. Accelerations by a factor of 10 with one GPGPU are not uncommon. Comparing electric power consumption is advantageous for GPGPUs.

Thanks to the popularity of the PC market, millions of GPUs are available – every PC has a GPU. This is the first time that massively parallel computing is feasible with a mass-market product. Applications such as magnetic resonance imaging (MRI) use some combination of PC and special hardware accelerators.

In five weeks, we plan to cover the following topics:

architecture, programming models, scalable GPUs

introduction to CUDA and data parallelism

CUDA thread organization, synchronization

CUDA memories, reducing memory traffic

coalescing and applications of GPU computing

The lecture notes follow the book by David B. Kirk and Wen-mei W. Hwu: Programming Massively Parallel Processors. A Hands-on Approach. Elsevier 2010; fourth edition, 2023, with Izzat El Hajj as third author.

What are the expected learning outcomes from the part of the course?

We will study the design of massively parallel algorithms.

We will understand the architecture of GPUs and the programming models to accelerate code with GPUs.

We will use software libraries to accelerate applications.

The key questions we address are the following:

Which problems may benefit from GPU acceleration?

Rely on existing software or develop own code?

How to mix MPI, multicore, and GPU?

The textbook authors use the peach metaphor: much of the application code will remain sequential; but GPUs can dramatically improve easy to parallelize code.

Our Microway workstation pascal (acquired in 2016)

has an NVIDIA GPU with the CUDA software development installed.

NIVDIA P100 general purpose graphics processing unit

number of CUDA cores: 3,584 (56 \(\times\) 64)

frequency of CUDA cores: 405MHz

double precision floating point performance: 4.7 Tflops (peak)

single precision floating point performance: 9.3 Tflops (peak)

total global memory: 16275 MBytes

CUDA programming model with

nvcccompiler.

To compare the theoretical peak performance of the P100, consider the theoretical peak performance of the two Intel E5-2699v4 (2.2GHz 22 cores) CPUs in the workstation:

2.20 GHz \(\times\) 8 flops/cycle = 17.6 GFlops/core;

44 core \(\times\) 17.6 GFlops/core = 774.4 GFlops.

\(\Rightarrow\) 4700/774.4 = 6.07. One P100 is as strong as 6 \(\times\) 44 = 264 cores.

CUDA stands for Compute Unified Device Architecture, is a general purpose parallel computing architecture introduced by NVIDIA.

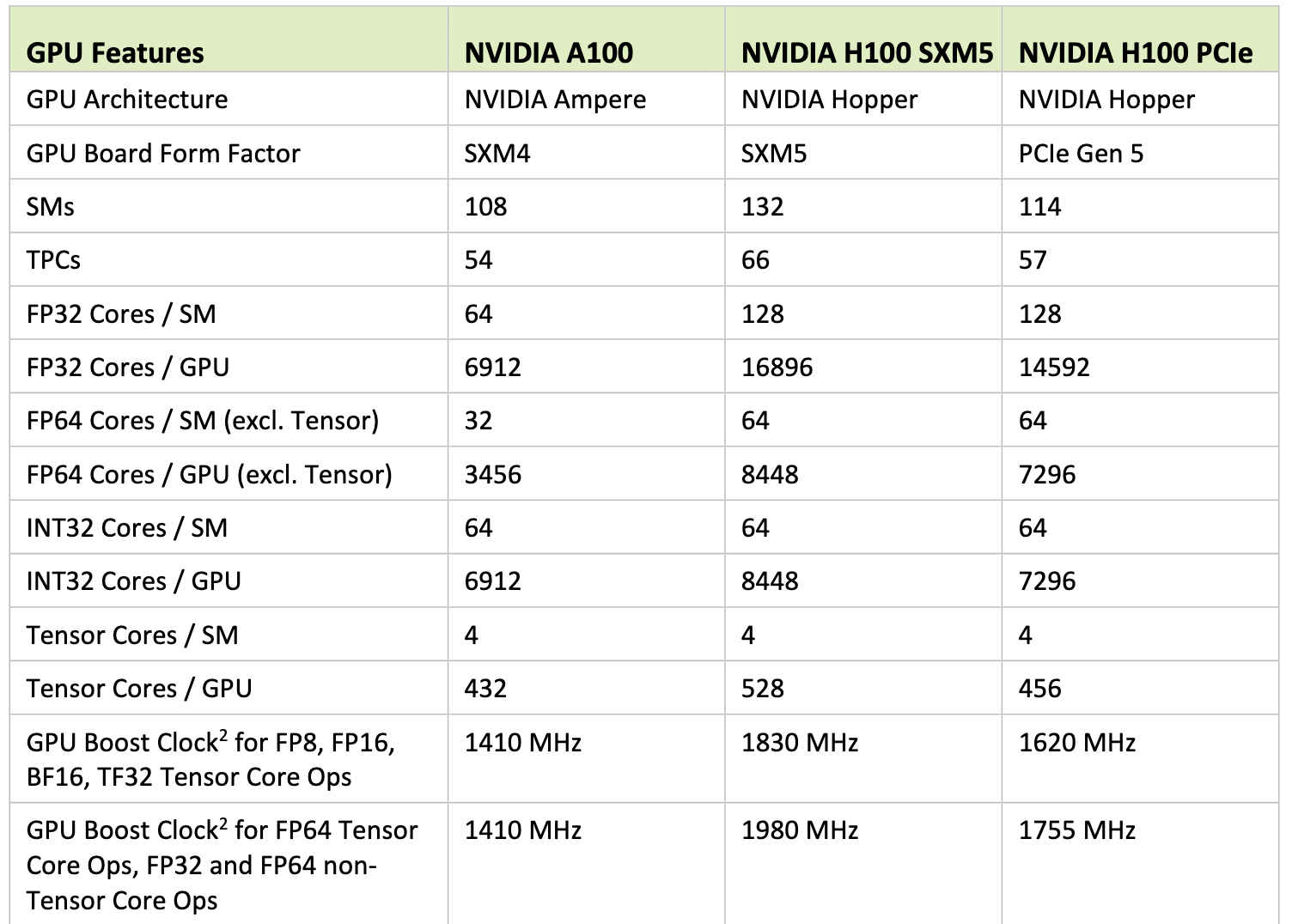

Consider the comparison of the specifications of three consecutive NVIDIA GPUs:

NVIDIA P100 16GB

PascalAccelerator3,586 CUDA cores, 3,586 = 56 SM \(\times\) 64 cores/SM

GPU max clock rate: 1329 MHz (1.33 GHz)

16GB Memory at 720GB/sec peak bandwidth

peak performance: 4.7 TFLOPS double precision

NVIDIA V100

VoltaAccelerator5,120 CUDA cores, 5,120 = 80 SM \(\times\) 64 cores/SM

GPU max clock rate: 1912 MHz (1.91 GHz)

Memory clock rate: 850 Mhz

32GB Memory at 870GB/sec peak bandwidth

peak performance: 7.9 TFLOPS double precision

NVIDIA A100

AmpereAccelerator6,912 CUDA cores, 6,912 = 108 SM \(\times\) 64 cores/SM

GPU max clock rate: 1410 MHz (1.41 GHz)

Memory clock rate: 1512 Mhz

80GB Memory at 1.94TB/sec peak bandwidth

peak performance: 9.7 TFLOPS double precision

peak FP64 Tensor Core performance: 19.5 TFLOPS

Tensor cores were already present in the P100 and V100, but those earlier tensor core were not capable of double precision arithmetic.

The full specifications are described in whitepapers available at the web site of NVIDIA:

NVIDIA GeForce GTX 680, 2012.

NVIDIA Tesla P100, 2016.

NVIDIA Tesla V100 GPU Architecture, August 2017.

NVIDIA A100 Tensor Core GPU Architecture, 2020.

NVIDIA Ampere GA102 GPU Architecture, 2021.

NVIDIA H100 Tensor Core GPU Architecture, 2022.

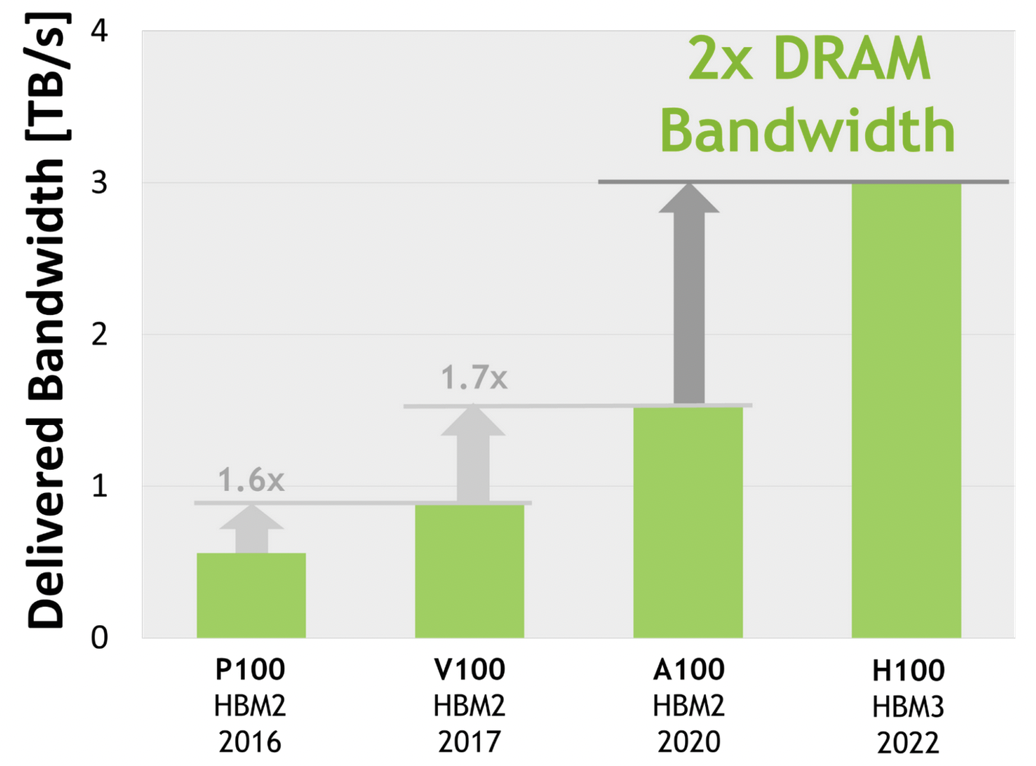

The evolution of bandwidth is shown in Fig. 47.

Fig. 47 Evolution of the bandwidth, from the NVIDIA developer documentation.¶

The evolution of core counts is shown in Fig. 48.

Fig. 48 Evolution of the core counts, from the NVIDIA developer documentation.¶

The evolution of the non-Tensor peak performance for various GPUs is as follows: Pascal: 4.7, Volta: 7.9, Ampere: 9.7, Hopper: 25.6 TFLOPS.

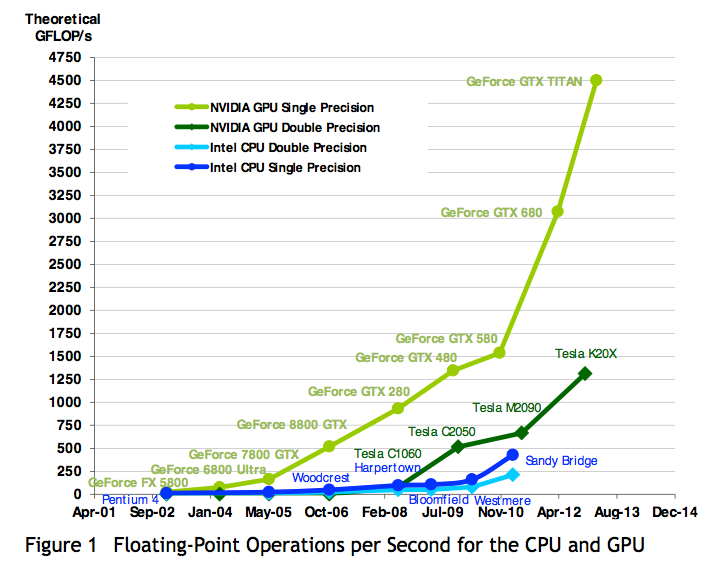

Graphics Processors as Parallel Computers¶

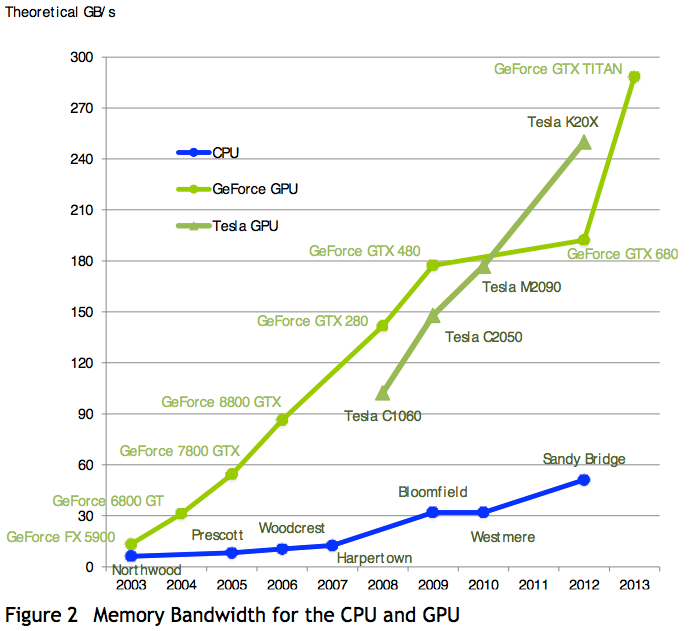

In this section we compare the performance between GPUs and CPU, explaining the difference between their architectures. The performance gap between GPUs and CPUs is illustrated by two figures, taken from the NVIDIA CUDA programming guide. We compare the flops in Fig. 49 and the memory bandwidth in Fig. 50.

Fig. 49 Flops comparison taken from the NVIDIA programming guide.¶

Fig. 50 Bandwidth comparision taken from the NVIDIA programming guide.¶

Memory bandwidth is the rate at which data can be read from/stored into

memory, expressed in bytes per second.

Graphics chips operate at approximately 10 times

the memory bandwidth of CPUs.

For our Microway station pascal, the memory bandwidth of the CPU

is 76.8GB/s, whereas the NVIDIA P100 has 720GB/s as peak bandwidth.

Straightforward parallel implementations on GPGPUs often achieve

directly a speedup of 10, saturating the memory bandwidth.

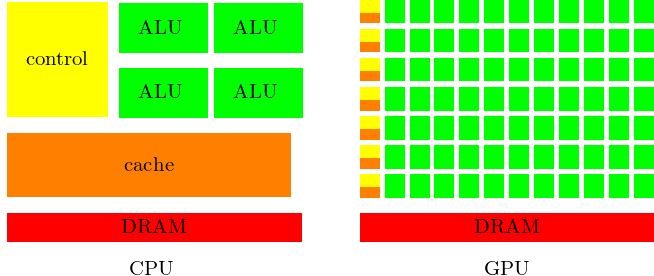

The main distinction between the CPU and GPU design is as follows:

CPU: multicore processors have large cores and large caches using control for optimal serial performance.

GPU: optimizing execution throughput of massive number of threads with small caches and minimized control units.

The distinction is illustrated in Fig. 51.

Fig. 51 Distinction between the design of a CPU and a GPU.¶

The architecture of a modern GPU is summarized in the following items:

A CUDA-capable GPU is organized into an array of highly threaded Streaming Multiprocessors (SMs).

Each SM has a number of Streaming Processors (SPs) that share control logic and an instruction cache.

Global memory of a GPU consists of multiple gigabytes of Graphic Double Data Rate (GDDR) DRAM.

Higher bandwidth makes up for longer latency.

The growing size of global memory allows to keep data longer in global memory, with only occasional transfers to the CPU.

A good application runs 10,000 threads simultaneously.

A concrete example of the GPU architecture is in Fig. 52.

Fig. 52 A concrete example of the GPU architecture.¶

Streaming multiprocessors support up to 2,048 threads. The multiprocessor creates, manages, schedules, and executes threads in groups of 32 parallel threads called warps. Unlike CPU cores, threads are executed in order and there is no branch prediction, although instructions are pipelined.

According to David Kirk and Wen-mei Hwu (page 14): Developers who are experienced with MPI and OpenMP will find CUDA easy to learn. CUDA (Compute Unified Device Architecture) is a programming model that focuses on data parallelism.

Data parallelism involves

huge amounts of data on which

the arithmetical operations are applied in parallel.

With MPI we applied the SPMD (Single Program Multiple Data) model. With GPGPU, the architecture is SIMT = Single Instruction Multiple Thread. An example with large amount of data parallelism is matrix-matrix multiplication in large dimensions. Available Software Development Tools (SDK), e.g.: BLAS, FFT are available for download at <http://www.nvidia.com>.

Alternatives to CUDA are

OpenCL (chapter 14) for heterogeneous computing;

OpenACC (chapter 15) uses directives like OpenMP;

C++ Accelerated Massive Parallelism (chapter 18).

Extensions to CUDA are

Thrust: productivity-oriented library for CUDA (chapter~16);

CUDA FORTRAN (chapter 17);

MPI/CUDA (chapter 19).

And then, of course, there is Julia, which provides packages for vendor agnostic GPU computing.

Bibliography¶

NVIDIA CUDA Programming Guide. Available at <http://developer.nvdia.com>.

Victor W. Lee et al: Debunking the 100X GPU vs. CPU Myth: An Evaluation of Throughput Computing on CPU and GPU. In Proceedings of the 37th annual International Symposium on Computer Architecture (ISCA’10), ACM 2010.

W.W. Hwu (editor). GPU Computing Gems: Emerald Edition. Morgan Kaufmann, 2011.

Exercises¶

How strong is the graphics card in your computer?