The Crew of Threads Model¶

We illustrate the use of Pthreads to implement the work crew model, in which threads cooperate to process a sequence of jobs given in a queue.

Multithreaded Processes¶

Before we start programming shared memory parallel computers, let us specify the relation between threads and processes.

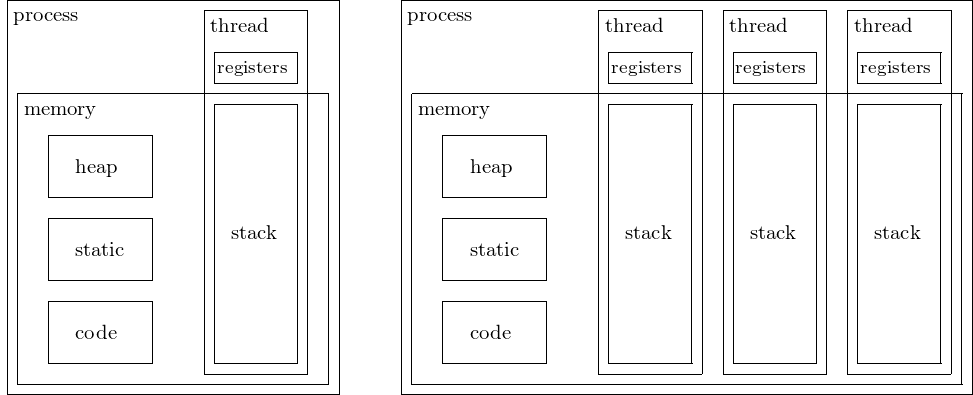

A thread is a single sequential flow within a process. Threads share the same single address space, so synchronization is needed when threads access the same memory locations. Multiple threads within one process share heap storage, for dynamic allocation and deallocation; static storage, which is fixed space; and code. Each thread has its own registers and stack. A single threaded process is depicted in Fig. 31 next to a multithreaded process.

Fig. 31 At the left we see a process with one single thread and at the right a multithreaded process.¶

The difference between the stack and the heap:

stack: Memory is allocated by reserving a block of fixed size on top of the stack. Deallocation is adjusting the pointer to the top.

heap: Memory can be allocated at any time and of any size.

Every call to calloc (or malloc) and the deallocation with free involves the heap.

Memory allocation or deallocation should typically happen respectively before or after the running of multiple threads.

In a multithreaded process, the memory allocation and deallocation should otherwise occur in a critical section.

Code is thread safe if its simultaneous execution by multiple threads is correct.
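As a minimal sketch of this advice, the code below allocates one array on the heap before any thread starts, lets each thread write only inside its own slice, and frees the array after all threads have been joined. The names slice_t, fill_slice, and sum_after_threads are made up for this illustration and do not appear in the lecture code.

```c
#include <assert.h>
#include <stdlib.h>
#include <pthread.h>

/* Hypothetical helper type: each thread gets its own slice of a shared array. */
typedef struct
{
    int *data;   /* shared heap array, allocated before the threads start */
    int begin;   /* first index of this thread's slice */
    int end;     /* one past the last index of the slice */
} slice_t;

/* Each thread writes only inside its own slice, so no lock is needed. */
static void *fill_slice ( void *args )
{
    slice_t *s = (slice_t*) args;
    for(int k = s->begin; k < s->end; k++) s->data[k] = k;
    return NULL;
}

/* Fills an array of n integers with nt threads and returns the sum
 * of the entries, or -1 if an allocation or a thread creation failed. */
long sum_after_threads ( int n, int nt )
{
    assert(n > 0 && nt > 0);
    /* all heap allocation happens before the threads run */
    int *data = (int*) calloc(n, sizeof(int));
    pthread_t *t = (pthread_t*) calloc(nt, sizeof(pthread_t));
    slice_t *s = (slice_t*) calloc(nt, sizeof(slice_t));
    long sum = -1;

    if(data != NULL && t != NULL && s != NULL)
    {
        int created = 0;
        for(int i = 0; i < nt; i++)
        {
            s[i].data = data;
            s[i].begin = (int)((long) n * i / nt);
            s[i].end = (int)((long) n * (i+1) / nt);
            if(pthread_create(&t[i], NULL, fill_slice, &s[i]) != 0) break;
            created++;
        }
        for(int i = 0; i < created; i++) pthread_join(t[i], NULL);
        if(created == nt)
        {
            sum = 0;
            for(int k = 0; k < n; k++) sum += data[k];
        }
    }
    /* all deallocation happens after the threads are joined */
    free(s); free(t); free(data);
    return sum;
}
```

A call such as sum_after_threads(1000, 4) adds up the integers 0, 1, ..., 999 written by four threads.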

The Work Crew Model¶

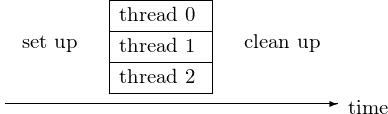

Instead of the manager/worker model where one node is responsible for the distribution of the jobs and the other nodes are workers, with threads we can apply a more collaborative model. We call this the work crew model. Fig. 32 illustrates a computation performed by three threads in a work crew model.

Fig. 32 A computation performed by 3 threads in a work crew model.¶

If the computation is divided into many jobs stored in a queue, then the threads grab the next job, compute the job, and push the result onto another queue or data structure.

We will simulate a work crew model:

Suppose we have a queue of \(n\) jobs.

Each job has a certain work load (computational cost).

There are \(p\) threads working on the \(n\) jobs.

To distribute the jobs among the threads, we can choose between the following:

either each worker has its own queue of jobs,

or idle workers do the next jobs in the shared queue.

We consider the second type of distributing jobs, which corresponds to dynamic load balancing, because which job gets executed by which thread is determined during execution.
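To make the difference between the two choices concrete, the sketch below computes the total running time (the makespan) for both, ignoring any overhead for grabbing a job. It is a sequential calculation, not a threaded program, and it assumes that "each worker has its own queue" means a cyclic assignment of job k to worker k mod p. The function names and the bound of 64 workers are choices made only for this illustration.

```c
#include <assert.h>

/* Makespan if job k is assigned in advance to worker k % p
 * (static distribution, every worker has its own queue). */
int static_makespan ( const int *work, int n, int p )
{
    assert(p > 0 && p <= 64);
    int load[64] = {0};
    int span = 0;
    for(int k = 0; k < n; k++) load[k % p] += work[k];
    for(int w = 0; w < p; w++) if(load[w] > span) span = load[w];
    return span;
}

/* Makespan if the worker that becomes idle first takes the next job
 * from the shared queue (dynamic load balancing). */
int dynamic_makespan ( const int *work, int n, int p )
{
    assert(p > 0 && p <= 64);
    int busy_until[64] = {0};
    int span = 0;
    for(int k = 0; k < n; k++)
    {
        int first = 0;   /* index of the worker that is idle first */
        for(int w = 1; w < p; w++)
            if(busy_until[w] < busy_until[first]) first = w;
        busy_until[first] += work[k];
    }
    for(int w = 0; w < p; w++)
        if(busy_until[w] > span) span = busy_until[w];
    return span;
}
```

For the job list 4, 5, 5, 2, 4, 6, 3, 6, 6, 4 and three workers, the cyclic static assignment needs 17 seconds, while the dynamic assignment needs 15 seconds, which equals the total work of 45 seconds divided by 3.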

A Crew of Workers with Julia¶

The output of a simulation with Julia is below.

$ julia -t 3 workcrew.jl

The jobs : [4, 5, 5, 2, 4, 6, 3, 6, 6, 4]

the number of threads : 3

Worker 1 is ready.

Worker 3 is ready.

Worker 2 is ready.

Worker 3 spends 5 seconds on job 2 ...

Worker 1 spends 4 seconds on job 1 ...

Worker 2 spends 5 seconds on job 3 ...

Worker 1 spends 2 seconds on job 4 ...

Worker 3 spends 6 seconds on job 6 ...

Worker 2 spends 4 seconds on job 5 ...

Worker 1 spends 3 seconds on job 7 ...

Worker 2 spends 6 seconds on job 8 ...

Worker 1 spends 6 seconds on job 9 ...

Worker 3 spends 4 seconds on job 10 ...

Jobs done : [1, 3, 2, 1, 2, 3, 1, 2, 1, 3]

The setup starts with generating a queue of jobs, done by the code below.

using Base.Threads

nbr = 10

jobs = rand((2, 3, 4, 5, 6), nbr)

println("The jobs : ", jobs)

nt = nthreads()

println("the number of threads : ", nt)

@threads for i=1:nt

println("Worker ", threadid(), " is ready.")

end

The queue of jobs is shared between all threads. The value of the index to the next job is also shared. The next idle worker will take this value and update it. For the correctness of the program, it is critical that during the update of this value, no other thread accesses the value. Julia provides the mechanism of the atomic variable, which will be illustrated next.

jobidx = Atomic{Int}(1)

@threads for i=1:nt

println("Worker ", threadid(), " is ready.")

while true

myjob = atomic_add!(jobidx, 1)

if myjob > nbr

break

end

println("Worker ", threadid(),

" spends ", jobs[myjob], " seconds",

" on job ", myjob, " ...")

sleep(jobs[myjob])

jobs[myjob] = threadid()

end

end

println("Jobs done : ", jobs)

The job index is accessed in a thread safe manner using an atomic variable, which is used as follows:

The job index is declared and initialized to one: jobidx = Atomic{Int}(1).

Incrementing the job index goes via myjob = atomic_add!(jobidx, 1), which returns the current value of jobidx and increments the value of jobidx by one.

Thread safe here means that reading and incrementing the job index happen as one indivisible step, so no two threads can obtain the same job index.
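For comparison with the C code used in the rest of this chapter, the same fetch-and-add idea can be sketched with C11 atomics. This is an analogue under assumptions, not a translation of the Julia program: the function name next_job is hypothetical, and the index is 0-based as is customary in C.

```c
#include <assert.h>
#include <stdatomic.h>

/* Returns the index of the next job (0-based), or -1 if all nbr jobs
 * have been handed out. atomic_fetch_add returns the old value and
 * adds one in a single indivisible step, like atomic_add! in Julia. */
int next_job ( atomic_int *jobidx, int nbr )
{
    assert(nbr >= 0);
    int myjob = atomic_fetch_add(jobidx, 1);
    return (myjob < nbr) ? myjob : -1;
}
```

Every thread of a work crew would call next_job in a loop until it returns -1. Note that the index keeps growing after the queue is empty, exactly as in the Julia loop above.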

Processing a Job Queue¶

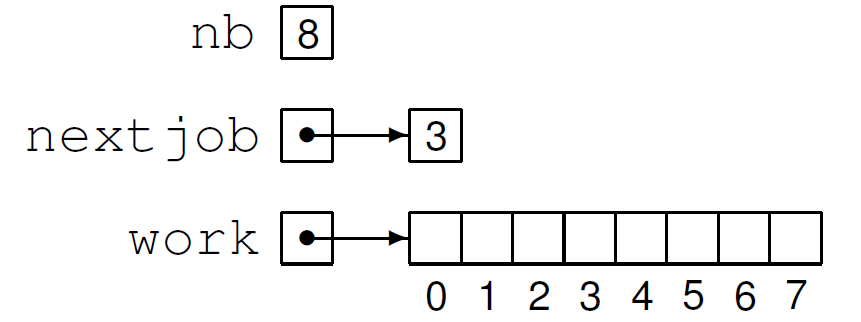

To define the simulation more precisely, consider that the state of the job queue is defined by

the number of jobs,

the index to the next job to be executed, and

the work to be done by every job.

Variables in a program can be values or references to values, as illustrated in Fig. 33, for a queue of 8 jobs. The current status of the queue is determined by the value of nextjob, the index to the next job.

Fig. 33 Representing a job queue by the number of jobs, the next job and the work for each job.¶

In C, the above picture is realized by the statements:

int nb = 8;

int *nextjob;

int *work;

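nextjob = (int*)calloc(1, sizeof(int)); /* nextjob must point to allocated memory before it is dereferenced */

*nextjob = 3;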

work = (int*)calloc(nb, sizeof(int));

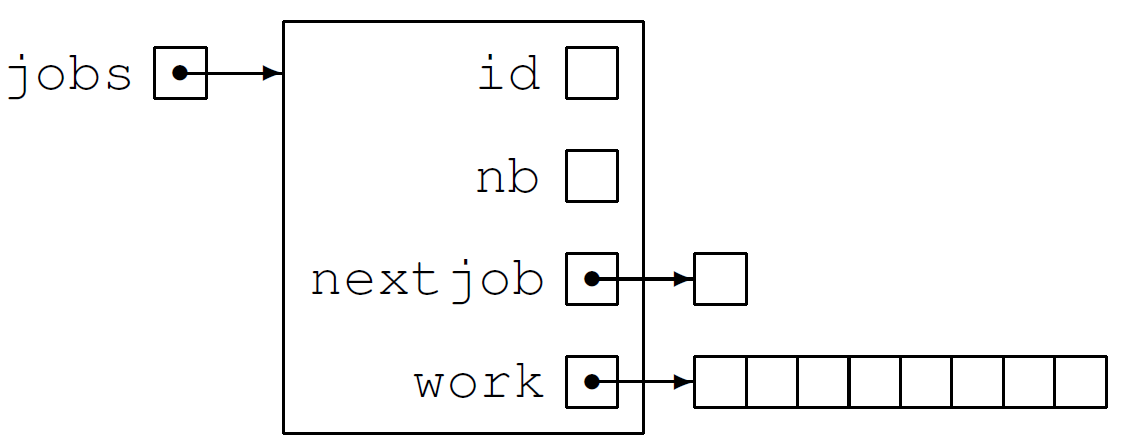

To define the sharing of data between threads, we encapsulate the references. Every thread has as values

its thread identification number id; and

the number of jobs nb.

The shared data are

the reference to the next job; and

the cost for every job.

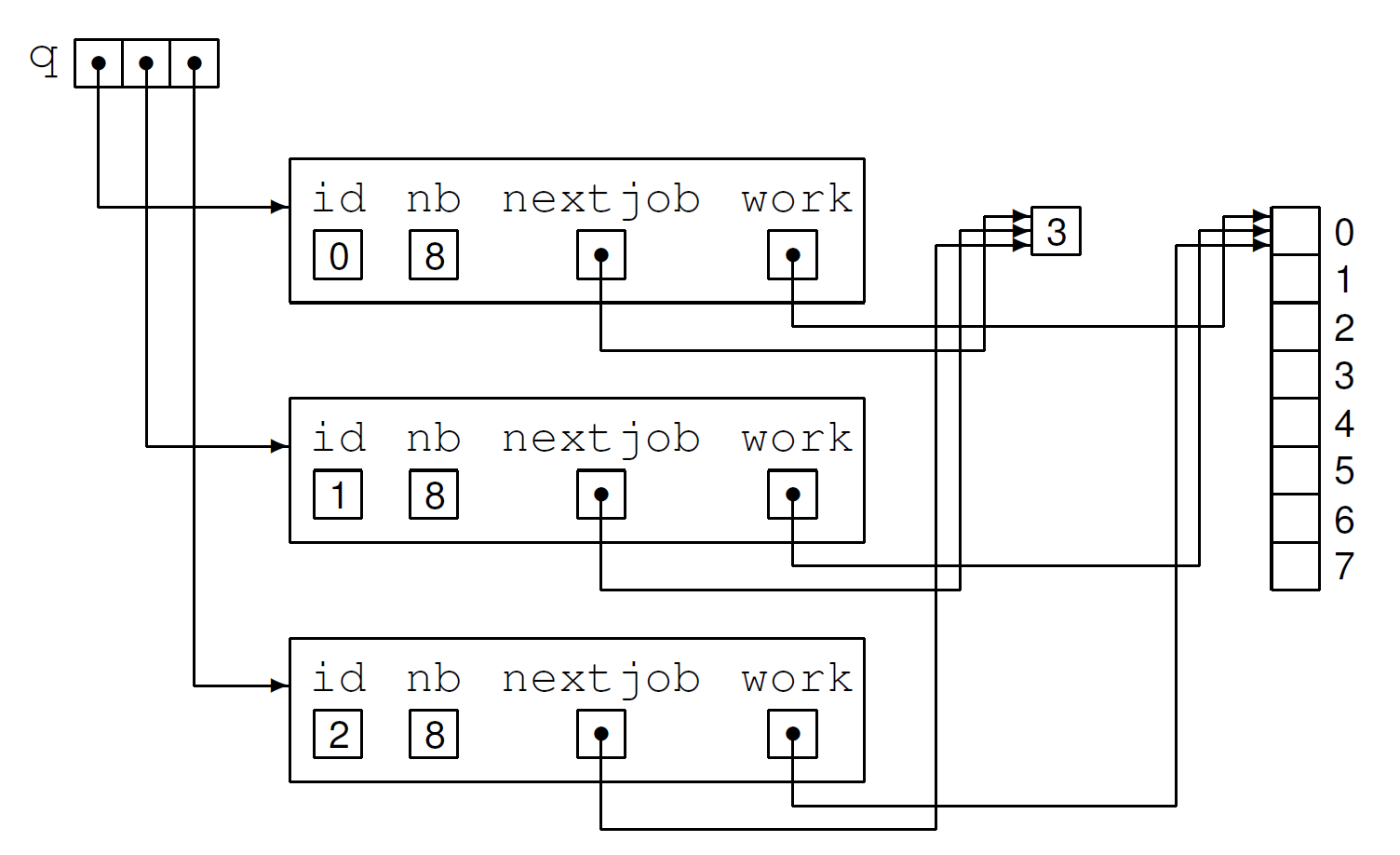

The use of values and pointers is illustrated in Fig. 34.

Fig. 34 Each thread has as values its identification number and the number of jobs. The shared values are in the memory locations referred to by the pointers nextjob and work.¶

The definition of the data structure in C is shown below.

typedef struct

{

int id; /* identification label */

int nb; /* number of jobs */

int *nextjob; /* index of next job */

int *work; /* array of nb jobs */

} jobqueue;

For example, to share the data of the job queue between 3 threads, consider Fig. 35.

Fig. 35 A job queue q to distribute 8 jobs among 3 threads.¶

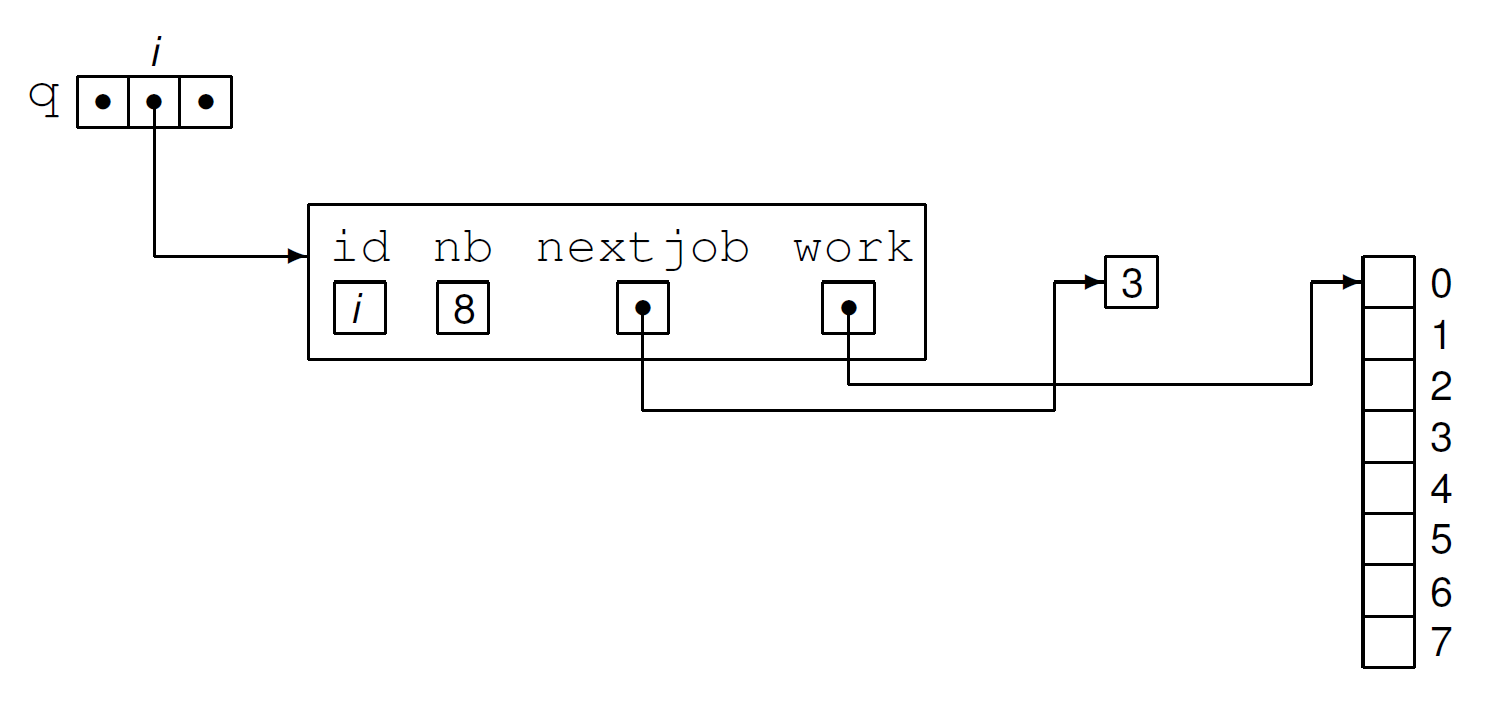

Thread \(i\) takes on input \(\mbox{\tt q[}i\mbox{\tt ]}\):

\(\mbox{\tt q[}i\mbox{\tt ].id} = i\),

\(\mbox{\tt q[}i\mbox{\tt ].nb} = 8\),

\(\mbox{\tt *q[}i\mbox{\tt ].nextjob} = 3\),

\(\mbox{\tt q[}i\mbox{\tt ].work[}3\mbox{\tt ]}\) defines the next job.

The situation for \(i=2\) on the example with 3 threads is illustrated in Fig. 36.

Fig. 36 A job queue q accessed by thread \(i=2\).¶

The sequential C code to process the job queue is listed below:

void do_job ( jobqueue *q )

{

int jobtodo;

do

{

jobtodo = -1;

int *j = q->nextjob;

if(*j < q->nb) jobtodo = (*j)++;

if(jobtodo == -1) break;

int w = q->work[jobtodo];

sleep(w);

}

while (jobtodo != -1);

}

The q->nextjob is equivalent to (*q).nextjob.

The jobtodo = (*j)++ dereferences j, assigns, and increments.
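The parentheses in (*j)++ matter. The small sketch below, with hypothetical function names, contrasts (*j)++, which increments the integer that j points to, with the unparenthesized form, which advances the pointer instead and leaves the integer unchanged.

```c
#include <assert.h>
#include <stddef.h>

/* Returns the old value of *j and leaves *j one larger,
 * which is what jobtodo = (*j)++ does with the job index. */
int take_and_advance ( int *j )
{
    assert(j != NULL);
    return (*j)++;
}

/* The form *p++ (here applied to the pointer *j) returns the value
 * pointed to and then moves the pointer to the next integer;
 * the value itself is not changed. */
int read_and_move_pointer ( int **j )
{
    assert(j != NULL && *j != NULL);
    return *(*j)++;
}
```

With int next = 3, the call take_and_advance(&next) returns 3 and leaves next equal to 4.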

Processing the Jobs with OpenMP¶

With OpenMP, we take the sequential code and define parallel regions. Observe the critical section in the code below.

void do_job ( jobqueue *q )

{

int jobtodo;

do

{

jobtodo = -1;

int *j = q->nextjob;

#pragma omp critical

if(*j < q->nb) jobtodo = (*j)++;

if(jobtodo == -1) break;

int w = q->work[jobtodo];

sleep(w);

}

while (jobtodo != -1);

}
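OpenMP (since version 3.1) also provides the atomic capture construct, which could take the place of the critical section for a simple read-and-increment. The sketch below is an alternative under assumptions, not the code of this lecture: the names grab_job and drain_queue are hypothetical, and unlike the listing above, the index is incremented even when no job is left. Compile with -fopenmp; without that flag the pragmas are ignored and the code runs sequentially.

```c
#include <assert.h>
#include <stddef.h>

/* Returns the index of the next job, or -1 if none is left.
 * The read of *nextjob and its increment form one atomic step. */
int grab_job ( int *nextjob, int nb )
{
    int job;
    assert(nextjob != NULL);
    #pragma omp atomic capture
    { job = *nextjob; *nextjob += 1; }
    return (job < nb) ? job : -1;
}

/* All threads of the parallel region take jobs from one shared index.
 * Returns the total number of jobs handed out, summed over the threads. */
int drain_queue ( int nb )
{
    int nextjob = 0;   /* shared by all threads in the region */
    int done = 0;      /* every thread counts its own jobs */
    #pragma omp parallel reduction(+:done)
    {
        while(grab_job(&nextjob, nb) != -1) done++;
    }
    return done;
}
```

Because every job index is returned to exactly one thread, drain_queue(8) returns 8 for any number of threads.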

The do_job function is called in the function below, which defines the parallel region.

int process_jobqueue ( jobqueue *jobs, int nbt )

{

jobqueue q[nbt];

int i;

for(i=0; i<nbt; i++)

{

q[i].nb = jobs->nb;

q[i].id = i;

q[i].nextjob = jobs->nextjob;

q[i].work = jobs->work;

}

#pragma omp parallel private(i) /* i is declared outside the region: without private(i) all threads would share it */

{

i = omp_get_thread_num();

do_job(&q[i]);

}

return *(jobs->nextjob);

}

And then finally, we define the main program.

int main ( int argc, char* argv[] )

{

int njobs,done,nbthreads;

jobqueue *jobs;

/* prompt for njobs and nbthreads */

jobs = make_jobqueue(njobs);

omp_set_num_threads(nbthreads);

done = process_jobqueue(jobs,nbthreads);

printf("done %d jobs\n", done);

return 0;

}

This detailed definition of the job queue works as well with Pthreads, as explained in the next section.

The POSIX Threads Programming Interface¶

For UNIX systems, a standardized C language threads programming interface has been specified by the IEEE POSIX 1003.1c standard. POSIX stands for Portable Operating System Interface. Implementations of this POSIX threads programming interface are referred to as POSIX threads, or Pthreads.

We can see that gcc supports POSIX threads when we ask for its version number:

$ gcc -v

... output omitted ...

Thread model: posix

... output omitted ...

In a C program we just insert

#include <pthread.h>

and compilation may require the switch -pthread

$ gcc -pthread program.c

Our first program with Pthreads is once again a hello world. We define the function each thread executes:

#include <stdio.h>

#include <stdlib.h>

#include <pthread.h>

void *say_hi ( void *args );

/*

* Every thread executes say_hi.

* The argument contains the thread id. */

int main ( int argc, char* argv[] ) { ... }

void *say_hi ( void *args )

{

int *i = (int*) args;

printf("hello world from thread %d!\n",*i);

return NULL;

}

Typing gcc -o /tmp/hello_pthreads hello_pthreads.c at the command prompt compiles the program and execution goes as follows:

$ /tmp/hello_pthreads

How many threads ? 5

creating 5 threads ...

waiting for threads to return ...

hello world from thread 0!

hello world from thread 2!

hello world from thread 3!

hello world from thread 1!

hello world from thread 4!

$

Below is the main program:

int main ( int argc, char* argv[] )

{

printf("How many threads ? ");

int n; scanf("%d",&n);

{

pthread_t t[n];

pthread_attr_t a;

int i,id[n];

printf("creating %d threads ...\n",n);

for(i=0; i<n; i++)

{

id[i] = i;

pthread_attr_init(&a);

pthread_create(&t[i],&a,say_hi,(void*)&id[i]);

}

printf("waiting for threads to return ...\n");

for(i=0; i<n; i++) pthread_join(t[i],NULL);

}

return 0;

}

In order to avoid sharing data between threads, we pass to each thread its unique identification label. To say_hi we pass the address of the label. With the array id[n] we have n distinct addresses:

pthread_t t[n];

pthread_attr_t a;

int i,id[n];

for(i=0; i<n; i++)

{

id[i] = i;

pthread_attr_init(&a);

pthread_create(&t[i],&a,say_hi,(void*)&id[i]);

}

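Passing &i instead of &id[i] gives every thread the same address. All threads then read the one loop counter i, whose value changes while the threads are being created, so the labels are no longer guaranteed to be unique.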
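The effect of passing distinct addresses can be checked with a small sketch. Each thread marks the label it received, and the creating thread counts how many different labels were seen. The names MAXT, seen, mark_label, and count_distinct_labels are hypothetical and serve only this illustration.

```c
#include <assert.h>
#include <pthread.h>

#define MAXT 16   /* hypothetical upper bound on the number of threads */

static int seen[MAXT];   /* seen[k] is set to 1 by the thread labeled k */

static void *mark_label ( void *args )
{
    int label = *((int*) args);   /* read the label behind the address */
    seen[label] = 1;              /* distinct labels: distinct entries */
    return NULL;
}

/* Launches n threads, each with the address of its own id[i],
 * and returns how many distinct labels the threads have seen. */
int count_distinct_labels ( int n )
{
    assert(n > 0 && n <= MAXT);
    pthread_t t[MAXT];
    int id[MAXT];
    int created = 0, count = 0;

    for(int i = 0; i < n; i++) seen[i] = 0;
    for(int i = 0; i < n; i++)
    {
        id[i] = i;   /* id[i] never changes again, unlike the counter i */
        if(pthread_create(&t[i], NULL, mark_label, &id[i]) != 0) break;
        created++;
    }
    for(int i = 0; i < created; i++) pthread_join(t[i], NULL);
    for(int i = 0; i < n; i++) count += seen[i];
    return count;
}
```

With &id[i] the count equals n whenever all threads could be created. With &i the outcome would depend on the timing of the threads.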

We can summarize the use of Pthreads in 3 steps:

Declare threads of type pthread_t and attribute(s) of type pthread_attr_t.

Initialize the attribute a as pthread_attr_init(&a); and create the threads with pthread_create, providing

the address of each thread,

the address of an attribute,

the function each thread executes, and

an address with arguments for the function.

Variables are shared between threads if the same address is passed as argument to the function the thread executes.

The creating thread waits for all threads to finish using pthread_join.
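The three steps can be sketched in one function that also checks the return codes, since the Pthreads functions return 0 on success and an error number otherwise. This sketch deviates from the listings above in two ways that are choices of this illustration: the attribute is initialized once, before the loop, and it is released with pthread_attr_destroy after the threads are created. The names square and square_all are hypothetical.

```c
#include <assert.h>
#include <pthread.h>

/* Every thread squares the integer at the address it receives. */
static void *square ( void *args )
{
    int *x = (int*) args;
    *x = (*x) * (*x);
    return NULL;
}

/* Squares x[0..n-1] with one thread per entry.
 * Returns 0 on success, or the first nonzero Pthreads error code. */
int square_all ( int *x, int n )
{
    assert(n > 0 && n <= 64);
    pthread_t t[64];                  /* step 1: declare the threads */
    pthread_attr_t a;                 /*         and an attribute */
    int created = 0;

    int rc = pthread_attr_init(&a);   /* step 2: initialize the attribute */
    if(rc != 0) return rc;
    for(int i = 0; i < n && rc == 0; i++)
    {
        /* thread address, attribute address, function, argument address */
        rc = pthread_create(&t[i], &a, square, &x[i]);
        if(rc == 0) created++;
    }
    pthread_attr_destroy(&a);         /* the attribute is no longer needed */

    for(int i = 0; i < created; i++)  /* step 3: wait for all threads */
    {
        int rj = pthread_join(t[i], NULL);
        if(rc == 0) rc = rj;
    }
    return rc;
}
```

Each thread receives a different address, &x[i], so no data is shared and no lock is needed.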

To process a queue of jobs, we will simulate a work crew model with Pthreads.

Suppose we have a queue with n jobs.

Each job has a certain work load (computational cost).

There are t threads working on the n jobs.

A variable nextjob is an index to the next job.

In a critical section, each thread

reads the current value of nextjob and

increments the value of nextjob by one.

The job queue is defined as a structure of constant values and pointers, which allows threads to share data.

typedef struct

{

int id; /* identification label */

int nb; /* number of jobs */

int *nextjob; /* index of next job */

int *work; /* array of nb jobs */

} jobqueue;

Every thread gets a job queue with two constants and two addresses. The constants are the identification number and the number of jobs. The identification number labels the thread and is different for each thread, whereas the number of jobs is the same for each thread. The two addresses are the index of the next job and the work array. Because we pass the addresses to each thread, each thread can change the data the addresses refer to.

The function to generate n jobs is defined next.

jobqueue *make_jobqueue ( int n )

{

jobqueue *jobs;

jobs = (jobqueue*) calloc(1,sizeof(jobqueue));

jobs->nb = n;

jobs->nextjob = (int*)calloc(1,sizeof(int));

*(jobs->nextjob) = 0;

jobs->work = (int*) calloc(n,sizeof(int));

int i;

for(i=0; i<n; i++)

jobs->work[i] = 1 + rand() % 5;

return jobs;

}
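The function above makes three separate calls to calloc, so releasing a job queue takes three calls to free. The sketch below shows one possible counterpart, together with a variant of the generator that checks the result of every allocation. Both names, free_jobqueue and make_jobqueue_checked, are hypothetical additions and not part of the lecture code.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct
{
    int id;        /* identification label */
    int nb;        /* number of jobs */
    int *nextjob;  /* index of next job */
    int *work;     /* array of nb jobs */
} jobqueue;

/* Releases the three blocks allocated for a job queue,
 * in reverse order of allocation; does nothing for NULL. */
void free_jobqueue ( jobqueue *jobs )
{
    if(jobs == NULL) return;
    free(jobs->work);
    free(jobs->nextjob);
    free(jobs);
}

/* Same layout as make_jobqueue, but returns NULL if a calloc fails. */
jobqueue *make_jobqueue_checked ( int n )
{
    assert(n > 0);
    jobqueue *jobs = (jobqueue*) calloc(1, sizeof(jobqueue));
    if(jobs == NULL) return NULL;
    jobs->nb = n;
    /* calloc zeroes the memory, so *nextjob starts at 0 */
    jobs->nextjob = (int*) calloc(1, sizeof(int));
    jobs->work = (int*) calloc(n, sizeof(int));
    if(jobs->nextjob == NULL || jobs->work == NULL)
    {
        free_jobqueue(jobs);   /* free(NULL) is harmless */
        return NULL;
    }
    for(int i = 0; i < n; i++)
        jobs->work[i] = 1 + rand() % 5;   /* cost between 1 and 5 */
    return jobs;
}
```

In line with the earlier advice on heap usage, free_jobqueue would be called by the main thread only after all worker threads have been joined.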

The function to process the jobs by n threads is defined below:

int process_jobqueue ( jobqueue *jobs, int n )

{

pthread_t t[n];

pthread_attr_t a;

jobqueue q[n];

int i;

printf("creating %d threads ...\n",n);

for(i=0; i<n; i++)

{

q[i].nb = jobs->nb; q[i].id = i;

q[i].nextjob = jobs->nextjob;

q[i].work = jobs->work;

pthread_attr_init(&a);

pthread_create(&t[i],&a,do_job,(void*)&q[i]);

}

printf("waiting for threads to return ...\n");

for(i=0; i<n; i++) pthread_join(t[i],NULL);

return *(jobs->nextjob);

}

Implementing a Critical Section with mutex¶

Running the processing of the job queue can go as follows:

$ /tmp/process_jobqueue

How many jobs ? 4

4 jobs : 3 5 4 4

How many threads ? 2

creating 2 threads ...

waiting for threads to return ...

thread 0 requests lock ...

thread 0 releases lock

thread 1 requests lock ...

thread 1 releases lock

*** thread 1 does job 1 ***

thread 1 sleeps 5 seconds

*** thread 0 does job 0 ***

thread 0 sleeps 3 seconds

thread 0 requests lock ...

thread 0 releases lock

*** thread 0 does job 2 ***

thread 0 sleeps 4 seconds

thread 1 requests lock ...

thread 1 releases lock

*** thread 1 does job 3 ***

thread 1 sleeps 4 seconds

thread 0 requests lock ...

thread 0 releases lock

thread 0 is finished

thread 1 requests lock ...

thread 1 releases lock

thread 1 is finished

done 4 jobs

4 jobs : 0 1 0 1

$

There are three steps to use a mutex (mutual exclusion):

initialization: pthread_mutex_t L = PTHREAD_MUTEX_INITIALIZER;

request a lock: pthread_mutex_lock(&L);

release the lock: pthread_mutex_unlock(&L);
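A mutex that is a field of a structure cannot use the static initializer at file scope in the same way. For that case Pthreads provides pthread_mutex_init and pthread_mutex_destroy. The sketch below applies the three steps to a shared counter instead of a job index; the names counter_t, add_many, and locked_count are hypothetical.

```c
#include <assert.h>
#include <pthread.h>

typedef struct
{
    pthread_mutex_t lock;   /* protects count */
    long count;             /* shared by all threads */
    int reps;               /* number of increments per thread */
} counter_t;

static void *add_many ( void *args )
{
    counter_t *c = (counter_t*) args;
    for(int k = 0; k < c->reps; k++)
    {
        pthread_mutex_lock(&c->lock);     /* request the lock */
        c->count++;                       /* critical section */
        pthread_mutex_unlock(&c->lock);   /* release the lock */
    }
    return NULL;
}

/* Runs nt threads that each add reps to one shared counter.
 * Returns the final count, or -1 if the mutex or a thread
 * could not be created. */
long locked_count ( int nt, int reps )
{
    assert(nt > 0 && nt <= 64 && reps >= 0);
    pthread_t t[64];
    counter_t c;
    int created = 0;

    c.count = 0; c.reps = reps;
    if(pthread_mutex_init(&c.lock, NULL) != 0) return -1;  /* initialization */
    for(int i = 0; i < nt; i++)
    {
        if(pthread_create(&t[i], NULL, add_many, &c) != 0) break;
        created++;
    }
    for(int i = 0; i < created; i++) pthread_join(t[i], NULL);
    pthread_mutex_destroy(&c.lock);   /* no thread uses the lock anymore */
    return (created == nt) ? c.count : -1;
}
```

All threads receive the same address &c, so the counter is shared, and the lock guarantees that no increment is lost.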

The main function is defined below. The flag v, whose declaration is not shown, switches on the diagnostic output when it is positive:

pthread_mutex_t read_lock = PTHREAD_MUTEX_INITIALIZER;

int main ( int argc, char* argv[] )

{

printf("How many jobs ? ");

int njobs; scanf("%d",&njobs);

jobqueue *jobs = make_jobqueue(njobs);

if(v > 0) write_jobqueue(jobs);

printf("How many threads ? ");

int nthreads; scanf("%d",&nthreads);

int done = process_jobqueue(jobs,nthreads);

printf("done %d jobs\n",done);

if(v>0) write_jobqueue(jobs);

return 0;

}

Below is the definition of the function do_job:

void *do_job ( void *args )

{

jobqueue *q = (jobqueue*) args;

int dojob;

do

{

dojob = -1;

if(v > 0) printf("thread %d requests lock ...\n",q->id);

pthread_mutex_lock(&read_lock);

int *j = q->nextjob;

if(*j < q->nb) dojob = (*j)++;

if(v>0) printf("thread %d releases lock\n",q->id);

pthread_mutex_unlock(&read_lock);

if(dojob == -1) break;

if(v>0) printf("*** thread %d does job %d ***\n",

q->id,dojob);

int w = q->work[dojob];

if(v>0) printf("thread %d sleeps %d seconds\n",q->id,w);

q->work[dojob] = q->id; /* mark job with thread label */

sleep(w);

} while (dojob != -1);

if(v>0) printf("thread %d is finished\n",q->id);

return NULL;

}

Pthreads allow for the finest granularity. Applied to the computation of the Mandelbrot set: one job is the computation of the grayscale of one pixel, in a 5,000-by-5,000 matrix. The job with number \(n = 5,000 \cdot i + j\) computes the pixel in row \(i = \lfloor n/5,000 \rfloor\) and column \(j = n ~{\rm mod}~ 5,000\).
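The mapping between job numbers and pixels is plain integer arithmetic, sketched below. The names DIM, job_to_pixel, and pixel_to_job are hypothetical; a thread in the work crew would call job_to_pixel on the job number it obtained from the shared index.

```c
#include <assert.h>

#define DIM 5000   /* the matrix of pixels is DIM-by-DIM */

/* Splits job number n into row i and column j, with n = DIM*i + j. */
void job_to_pixel ( long n, int *i, int *j )
{
    assert(n >= 0 && n < (long) DIM * DIM);
    *i = (int)(n / DIM);   /* integer division drops the remainder */
    *j = (int)(n % DIM);   /* the remainder is the column */
}

/* The inverse mapping: the job number of the pixel at (i, j). */
long pixel_to_job ( int i, int j )
{
    assert(i >= 0 && i < DIM && j >= 0 && j < DIM);
    return (long) DIM * i + j;
}
```

For example, job number 15,007 corresponds to row 3 and column 7, because 15,007 = 5,000 · 3 + 7.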

The Dining Philosophers Problem¶

A classic example to illustrate the synchronization problem in parallel programs is the dining philosophers problem.

The problem setup, rules of the game:

Five philosophers are seated at a round table.

Each philosopher sits in front of a plate of food.

Between each pair of adjacent plates is exactly one chop stick.

A philosopher thinks, eats, thinks, eats, …

To start eating, every philosopher

first picks up the left chop stick, and

then picks up the right chop stick.

Why is there a problem?

The problem of the starving philosophers:

every philosopher picks up the left chop stick at the same time,

there is no right chop stick left, every philosopher waits for a chop stick that is never put down, …
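The situation can be checked without any threads by recording who holds which chop stick. The sketch below is a bookkeeping exercise, not a simulation with Pthreads: it assumes that chop stick i lies to the left of philosopher i and chop stick (i+1) mod n to the right, and it counts the philosophers who find their right chop stick taken after everyone has picked up the left one. The names are hypothetical.

```c
#include <assert.h>

#define MAXPHIL 64   /* hypothetical upper bound for this sketch */

/* Counts the philosophers, out of n, who cannot pick up their
 * right chop stick after all of them picked up the left one. */
int blocked_after_left_pickup ( int n )
{
    assert(n >= 2 && n <= MAXPHIL);
    int holder[MAXPHIL];   /* holder[c] is the philosopher holding c */
    int blocked = 0;

    /* every philosopher i picks up the left chop stick i */
    for(int c = 0; c < n; c++) holder[c] = c;

    for(int i = 0; i < n; i++)
    {
        int right = (i + 1) % n;
        /* the right chop stick is in the hand of the neighbor */
        if(holder[right] != i) blocked++;
    }
    return blocked;
}
```

For five philosophers all five are blocked: each one waits for a neighbor who is waiting as well, so nobody ever eats.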

Exercises¶

Modify the hello world! program with Pthreads so that the master thread prompts the user for a name which is used in the greeting displayed by thread 5. Note that only one thread, the one with number 5, greets the user.

Consider the Monte Carlo simulations we have developed with MPI for the estimation of \(\pi\). Write a version with Julia, or OpenMP, or Pthreads and examine the speedup.

Consider the computation of the Mandelbrot set as implemented in the program mandelbrot.c of lecture 7. Write code (with Julia, or OpenMP, or Pthreads) for a work crew model of threads to compute the grayscales. Does the grain size matter? Compare the running time of your program with your MPI implementation.

For some number N, array x, function f, consider:

#pragma omp parallel

{

#pragma omp for schedule(dynamic)

for(i=0; i<N; i++) x[i] = f(i);

}

Define the simulation of the dynamic load balancing with the job queue using schedule(dynamic).

Write a simulation for the dining philosophers problem. Could you observe starvation? Explain.